unitGenerator

Create unsupervised image-to-image translation (UNIT) generator network

Since R2021a

Description

net = unitGenerator(inputSizeSource) creates a UNIT generator network, net, for input of size inputSizeSource. For more information about the network architecture, see UNIT Generator Network. The network has two inputs and four outputs:

- The two network inputs are images in the source and target domains. By default, the target image size is the same as the source image size. You can change the number of channels in the target image by specifying the NumTargetInputChannels name-value argument.

- Two of the network outputs are self-reconstructed outputs, in other words, source-to-source and target-to-target translated images.

- The other two network outputs are the source-to-target and target-to-source translated images.

This function requires Deep Learning Toolbox™.

net = unitGenerator(inputSizeSource,Name=Value) modifies aspects of the UNIT generator network using name-value arguments.

Examples

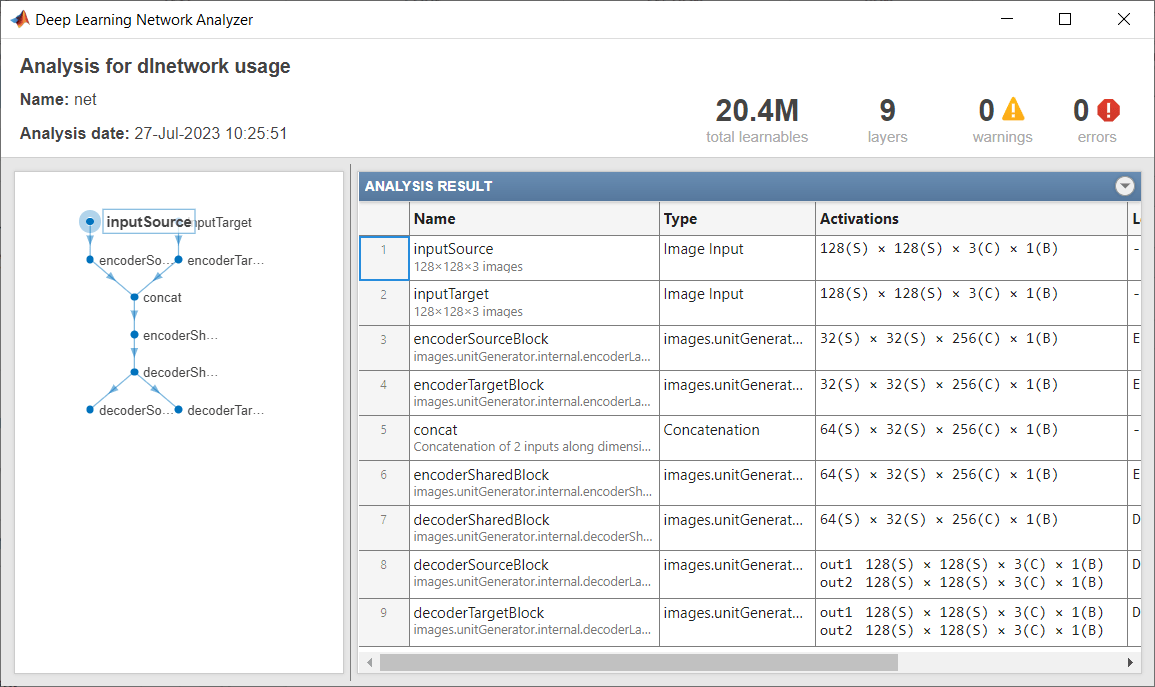

Specify the network input size for RGB images of size 128-by-128.

inputSize = [128 128 3];

Create a UNIT generator that generates RGB images of the input size.

net = unitGenerator(inputSize);

Display the network.

analyzeNetwork(net)

Specify the network input size for RGB images of size 128-by-128 pixels.

inputSize = [128 128 3];

Create a UNIT generator with five residual blocks, three of which are shared between the encoder and decoder modules.

net = unitGenerator(inputSize,NumResidualBlocks=5, ...
    NumSharedBlocks=3);

Display the network.

analyzeNetwork(net)

Input Arguments

Input size of the source image, specified as a 3-element vector of positive integers. inputSizeSource has the form [H W C], where H is the height, W is the width, and C is the number of channels. The length of each dimension must be evenly divisible by 2^NumDownsamplingBlocks.
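
You can check the divisibility constraint before you create the network. The following is a minimal sketch in base MATLAB. The value of numDownsamplingBlocks is chosen for illustration and is not taken from this page, and the check assumes that the constraint applies to the height and width rather than to the channel count.

```matlab
% Illustrative values; numDownsamplingBlocks = 2 is an assumption for this sketch.
inputSizeSource = [128 128 3];
numDownsamplingBlocks = 2;

% Total downsampling factor applied by the encoder module.
factor = 2^numDownsamplingBlocks;

% Assumption: only the spatial dimensions (height and width) must be divisible.
isValidSize = all(mod(inputSizeSource(1:2),factor) == 0);

% Spatial size of the data after the encoder module downsamples it.
encodedSize = inputSizeSource(1:2)/factor;
```

If isValidSize is false, one option is to resize or crop the images so that the height and width become multiples of factor.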

Name-Value Arguments

Specify optional pairs of arguments as Name1=Value1,...,NameN=ValueN, where Name is the argument name and Value is the corresponding value. Name-value arguments must appear after other arguments, but the order of the pairs does not matter.

Example: net = unitGenerator(inputSizeSource,NumDownsamplingBlocks=3) creates a network with three downsampling blocks.

Before R2021a, use commas to separate each name and value, and enclose Name in quotes.

Example: net = unitGenerator(inputSizeSource,"NumDownsamplingBlocks",3) creates a network with three downsampling blocks.

Number of downsampling blocks in the source encoder and target encoder subnetworks, specified as a positive integer. In total, the encoder module downsamples the source and target input by a factor of 2^NumDownsamplingBlocks. The source decoder and target decoder subnetworks have the same number of upsampling blocks.

Number of residual blocks in the encoder module, specified as a positive integer. The decoder module has the same number of residual blocks.

Number of residual blocks in the shared encoder subnetwork, specified as a positive integer. The shared decoder subnetwork has the same number of residual blocks. The network should contain at least one shared residual block.

Number of channels in the target image, specified as a positive integer. By default, NumTargetInputChannels is the same as the number of channels in the source image, which is the third element of inputSizeSource.
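
As a sketch of this option, the following call pairs an RGB source domain with a single-channel target domain. It uses only the unitGenerator syntax and the NumTargetInputChannels argument described on this page, so it needs the same products that unitGenerator requires. InputNames and OutputNames are standard dlnetwork properties. The choice of a grayscale target domain is illustrative.

```matlab
% Source images are 128-by-128 RGB; target images are assumed to be grayscale.
inputSizeSource = [128 128 3];
net = unitGenerator(inputSizeSource,NumTargetInputChannels=1);

% List the two network inputs and the four network outputs by name.
disp(net.InputNames)
disp(net.OutputNames)
```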

Number of filters in the first convolution layer, specified as a positive even integer.

Filter size in the first and last convolution layers of the network, specified as a positive odd integer or 2-element vector of positive odd integers of the form [height width]. When you specify the filter size as a scalar, the filter has equal height and width.

Filter size in intermediate convolution layers, specified as a positive odd integer or 2-element vector of positive odd integers of the form [height width]. The intermediate convolution layers are the convolution layers excluding the first and last convolution layer. When you specify the filter size as a scalar, the filter has identical height and width.
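
The scalar and two-element forms describe the same kind of filter. The sketch below shows the equivalence in base MATLAB. The helper expandFilterSize is hypothetical, written only for this illustration, and is not part of any toolbox.

```matlab
% Hypothetical helper: expand a scalar filter size to the [height width] form.
expandFilterSize = @(sz) sz .* ones(1,2);

scalarForm = expandFilterSize(3);      % a scalar is expanded to equal height and width
vectorForm = expandFilterSize([3 5]);  % a [height width] vector is left unchanged
```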

Style of padding used in the network, specified as one of these values.

| PaddingValue | Description |
|---|---|
| Numeric scalar | Pad with the specified numeric value |
| "symmetric-include-edge" | Pad using mirrored values of the input, including the edge values |
| "symmetric-exclude-edge" | Pad using mirrored values of the input, excluding the edge values |
| "replicate" | Pad using repeated border elements of the input |
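
The padding styles differ only in which values fill the border. The sketch below illustrates the semantics on a row vector in base MATLAB, with two padding elements on each side. It mimics the behavior by indexing and does not show how the network layers implement padding. The sample vector and variable names are illustrative.

```matlab
x = [1 2 3];   % sample input row
p = 2;         % number of padding elements on each side
n = numel(x);

% Numeric scalar: pad with a constant value (0 in this sketch).
padConstant = [zeros(1,p) x zeros(1,p)];

% "symmetric-include-edge": mirror the input and repeat the edge values.
padIncludeEdge = [x(p:-1:1) x x(n:-1:n-p+1)];

% "symmetric-exclude-edge": mirror the input without repeating the edge values.
padExcludeEdge = [x(p+1:-1:2) x x(n-1:-1:n-p)];

% "replicate": repeat the border elements.
padReplicate = [repmat(x(1),1,p) x repmat(x(n),1,p)];
```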

Method used to upsample activations, specified as one of these values:

- "transposedConv": Use a transposedConv2dLayer (Deep Learning Toolbox) with a stride of [2 2].

- "bilinearResize": Use a convolution2dLayer (Deep Learning Toolbox) with a stride of [1 1] followed by a resize2dLayer with a scale of [2 2].

- "pixelShuffle": Use a convolution2dLayer (Deep Learning Toolbox) with a stride of [1 1] followed by a depthToSpace2dLayer with a block size of [2 2].

Data Types: char | string

Weight initialization used in convolution layers, specified as "glorot", "he", "narrow-normal", or a function handle. For more information, see Specify Custom Weight Initialization Function (Deep Learning Toolbox).
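
A function handle gives you control over how the convolution weights are drawn. The sketch below assumes that the handle follows the form weights = func(sz) described in the linked topic, where sz is the size of the weights array. The name smallNormalInit, the standard deviation of 0.02, and the example size are illustrative choices, not values from this page.

```matlab
% Hypothetical initializer: zero-mean Gaussian weights with standard deviation 0.02.
smallNormalInit = @(sz) 0.02*randn(sz);

% Example call with an assumed weights size: 3-by-3 filters, 3 channels, 64 filters.
weights = smallNormalInit([3 3 3 64]);
```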

Activation function to use in the network except after the first and final convolution layers, specified as one of these values. The unitGenerator function automatically adds a leaky ReLU layer after the first convolution layer. For more information and a list of available layers, see Activation Layers (Deep Learning Toolbox).

- "relu": Use a reluLayer (Deep Learning Toolbox)

- "leakyRelu": Use a leakyReluLayer (Deep Learning Toolbox) with a scale factor of 0.2

- "elu": Use an eluLayer (Deep Learning Toolbox)

- A layer object

Activation function after the final convolution layer in the source decoder, specified as one of these values. For more information and a list of available layers, see Activation Layers (Deep Learning Toolbox).

- "tanh": Use a tanhLayer (Deep Learning Toolbox)

- "sigmoid": Use a sigmoidLayer (Deep Learning Toolbox)

- "softmax": Use a softmaxLayer (Deep Learning Toolbox)

- "none": Do not use a final activation layer

- A layer object

Activation function after the final convolution layer in the target decoder, specified as one of these values. For more information and a list of available layers, see Activation Layers (Deep Learning Toolbox).

- "tanh": Use a tanhLayer (Deep Learning Toolbox)

- "sigmoid": Use a sigmoidLayer (Deep Learning Toolbox)

- "softmax": Use a softmaxLayer (Deep Learning Toolbox)

- "none": Do not use a final activation layer

- A layer object

Output Arguments

UNIT generator network, returned as a dlnetwork (Deep Learning Toolbox) object.

More About

UNIT Generator Network

A UNIT generator network consists of three subnetworks in an encoder module followed by three subnetworks in a decoder module. The default network follows the architecture proposed by Liu, Breuel, and Kautz [1].

The encoder module downsamples the input by a factor of 2^NumDownsamplingBlocks. The encoder module consists of three subnetworks.

- The source encoder subnetwork, called 'encoderSourceBlock', has an initial block of layers that accepts data in the source domain, XS. The subnetwork then has NumDownsamplingBlocks downsampling blocks that downsample the data, and NumResidualBlocks – NumSharedBlocks residual blocks.

- The target encoder subnetwork, called 'encoderTargetBlock', has an initial block of layers that accepts data in the target domain, XT. The subnetwork then has NumDownsamplingBlocks downsampling blocks that downsample the data, and NumResidualBlocks – NumSharedBlocks residual blocks.

- The outputs of the source encoder and target encoder are combined by a concatenationLayer (Deep Learning Toolbox). The shared residual encoder subnetwork, called 'encoderSharedBlock', accepts the concatenated data and has NumSharedBlocks residual blocks.

The decoder module consists of three subnetworks that perform a total of NumDownsamplingBlocks upsampling operations on the data.

- The shared residual decoder subnetwork, called 'decoderSharedBlock', accepts data from the encoder and has NumSharedBlocks residual blocks.

- The source decoder subnetwork, called 'decoderSourceBlock', has NumResidualBlocks – NumSharedBlocks residual blocks, NumDownsamplingBlocks upsampling blocks that upsample the data, and a final block of layers that returns the output. This subnetwork returns two outputs in the source domain: XTS and XSS. The output XTS is an image translated from the target domain to the source domain. The output XSS is a self-reconstructed image from the source domain to the source domain.

- The target decoder subnetwork, called 'decoderTargetBlock', has NumResidualBlocks – NumSharedBlocks residual blocks, NumDownsamplingBlocks upsampling blocks that upsample the data, and a final block of layers that returns the output. This subnetwork returns two outputs in the target domain: XST and XTT. The output XST is an image translated from the source domain to the target domain. The output XTT is a self-reconstructed image from the target domain to the target domain.
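
The block counts described above follow from simple arithmetic on the name-value arguments. The sketch below works through them in base MATLAB, using the residual and shared block counts from the second example on this page. The value of numDownsamplingBlocks is an assumption for illustration, and the variable names are not part of the unitGenerator interface.

```matlab
% Values from the second example on this page.
numResidualBlocks = 5;
numSharedBlocks = 3;

% Assumed value for this sketch.
numDownsamplingBlocks = 2;

% Residual blocks in each domain-specific encoder or decoder subnetwork.
numUnsharedBlocks = numResidualBlocks - numSharedBlocks;

% Residual blocks that one image passes through in the encoder module:
% the unshared blocks of its own encoder plus the shared blocks.
numEncoderBlocksPerPath = numUnsharedBlocks + numSharedBlocks;

% Each decoder subnetwork upsamples as many times as each encoder downsamples,
% so the output regains the spatial size of the input.
netScale = 2^numDownsamplingBlocks / 2^numDownsamplingBlocks;

fprintf("Unshared residual blocks per subnetwork: %d\n",numUnsharedBlocks);
fprintf("Shared residual blocks per module: %d\n",numSharedBlocks);
```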

The subnetworks are built from these types of layer blocks:

- Initial block

- Downsampling block

- Residual block

- Upsampling block

- Final block

Tips

- You can create the discriminator network for UNIT by using the patchGANDiscriminator function.

- Train the UNIT GAN network using a custom training loop.

- To perform domain translation of source image to target image and vice versa, use the unitPredict function.

- For shared latent feature encoding, the arguments NumSharedBlocks and NumResidualBlocks must be greater than 0.

References

[1] Liu, Ming-Yu, Thomas Breuel, and Jan Kautz. "Unsupervised Image-to-Image Translation Networks." Advances in Neural Information Processing Systems 30 (NIPS 2017). Long Beach, CA: 2017. https://arxiv.org/abs/1703.00848.

Version History

Introduced in R2021a
