vSLAM

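Visual simultaneous localization and mapping (vSLAM) is the process of calculating the position and orientation of a camera with respect to its surroundings while simultaneously mapping that environment. The process uses only visual inputs from the camera. Applications of visual SLAM include augmented reality, robotics, and autonomous driving. Visual-inertial SLAM (viSLAM) fuses visual inputs from a camera with inertial measurements from an IMU to improve SLAM results. For more details, see Implement Visual SLAM in MATLAB.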
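The workflow described above can be sketched with the `monovslam` object and its object functions listed on this page. This is a minimal sketch under stated assumptions, not a definitive implementation: `runMonoVslamSketch` and `imageFolder` are hypothetical names introduced for illustration, the folder is assumed to hold an ordered image sequence from a single calibrated camera, and Computer Vision Toolbox (R2023b or later) is assumed to be installed.

```matlab
function [xyzPoints, camPoses] = runMonoVslamSketch(imageFolder, intrinsics)
% runMonoVslamSketch  Hypothetical helper illustrating the monovslam workflow.
%   imageFolder : folder with an ordered image sequence (assumption)
%   intrinsics  : cameraIntrinsics object for the camera that took the images

    imds  = imageDatastore(imageFolder);   % image sequence, read in file order
    vslam = monovslam(intrinsics);         % monocular visual SLAM object

    % Feed the frames one at a time; tracking and mapping run inside the object.
    for i = 1:numel(imds.Files)
        addFrame(vslam, readimage(imds, i));
        if hasNewKeyFrame(vslam)
            plot(vslam);                   % map points and camera trajectory
        end
    end

    % Wait until every added frame has been processed.
    while ~isDone(vslam)
        pause(0.01);
    end

    xyzPoints = mapPoints(vslam);          % 3-D map points
    camPoses  = poses(vslam);              % absolute poses of the key frames
end
```

A call might look like `[pts, camPoses] = runMonoVslamSketch("path/to/sequence", cameraIntrinsics([535.4 539.2], [320.1 247.6], [480 640]))`, where the path and the intrinsic values are placeholders to be replaced with your own data and calibration results.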

Functions

detectSURFFeatures | Detect SURF features |

detectORBFeatures | Detect ORB keypoints |

extractFeatures | Extract interest point descriptors |

matchFeatures | Find matching features |

matchFeaturesInRadius | Find matching features within specified radius (Since R2021a) |

triangulate | 3-D locations of undistorted matching points in stereo images |

img2world2d | Determine world coordinates of image points (Since R2022b) |

world2img | Project world points into image (Since R2022b) |

estgeotform2d | Estimate 2-D geometric transformation from matching point pairs (Since R2022b) |

estgeotform3d | Estimate 3-D geometric transformation from matching point pairs (Since R2022b) |

estimateFundamentalMatrix | Estimate fundamental matrix from corresponding points in stereo images |

estworldpose | Estimate camera pose from 3-D to 2-D point correspondences (Since R2022b) |

findWorldPointsInView | Find world points observed in view |

findWorldPointsInTracks | Find world points that correspond to point tracks |

estrelpose | Calculate relative rotation and translation between camera poses (Since R2022b) |

optimizePoses | Optimize absolute poses using relative pose constraints |

createPoseGraph | Create pose graph |

bundleAdjustment | Adjust collection of 3-D points and camera poses |

bundleAdjustmentMotion | Adjust collection of 3-D points and camera poses using motion-only bundle adjustment |

bundleAdjustmentStructure | Refine 3-D points using structure-only bundle adjustment |

compareTrajectories | Compare estimated trajectory against ground truth (Since R2024b) |

trajectoryErrorMetrics | Store accuracy metrics for trajectories (Since R2024b) |

imshow | Display image |

showMatchedFeatures | Display corresponding feature points |

plot | Plot image view set views and connections |

plotCamera | Plot camera in 3-D coordinates |

pcshow | Plot 3-D point cloud |

pcplayer | Visualize streaming 3-D point cloud data |

bagOfFeatures | Bag of visual words object |

bagOfFeaturesDBoW | Bag of visual words using DBoW2 library (Since R2024b) |

dbowLoopDetector | Detect loop closure using visual features (Since R2024b) |

imageviewset | Manage data for structure-from-motion, visual odometry, and visual SLAM |

worldpointset | Manage 3-D to 2-D point correspondences |

indexImages | Create image search index |

invertedImageIndex | Search index that maps visual words to images |

monovslam | Visual simultaneous localization and mapping (vSLAM) and visual-inertial sensor fusion with monocular camera (Since R2023b) |

addFrame | Add image frame to visual SLAM object (Since R2023b) |

hasNewKeyFrame | Check if new key frame added in visual SLAM object (Since R2023b) |

checkStatus | Check status of visual SLAM object (Since R2023b) |

isDone | End-of-processing status for visual SLAM object (Since R2023b) |

mapPoints | Build 3-D map of world points (Since R2023b) |

poses | Absolute camera poses of key frames (Since R2023b) |

plot | Plot 3-D map points and estimated camera trajectory in visual SLAM (Since R2023b) |

reset | Reset visual SLAM object (Since R2023b) |

rgbdvslam | Feature-based visual simultaneous localization and mapping (vSLAM) and visual-inertial sensor fusion with RGB-D camera (Since R2024a) |

addFrame | Add pair of color and depth images to RGB-D visual SLAM object (Since R2024a) |

hasNewKeyFrame | Check if new key frame added in RGB-D visual SLAM object (Since R2024a) |

checkStatus | Check status of RGB-D visual SLAM object (Since R2024a) |

isDone | End-of-processing status for RGB-D visual SLAM object (Since R2024a) |

mapPoints | Build 3-D map of world points from RGB-D vSLAM object (Since R2024a) |

poses | Absolute camera poses of RGB-D vSLAM key frames (Since R2024a) |

plot | Plot 3-D map points and estimated camera trajectory in RGB-D visual SLAM (Since R2024a) |

reset | Reset RGB-D visual SLAM object (Since R2024a) |

stereovslam | Feature-based visual simultaneous localization and mapping (vSLAM) and visual-inertial sensor fusion with stereo camera (Since R2024a) |

addFrame | Add pair of stereo images to stereo visual SLAM object (Since R2024a) |

hasNewKeyFrame | Check if new key frame added in stereo visual SLAM object (Since R2024a) |

checkStatus | Check status of stereo visual SLAM object (Since R2024a) |

isDone | End-of-processing status for stereo visual SLAM object (Since R2024a) |

mapPoints | Build 3-D map of world points from stereo vSLAM object (Since R2024a) |

poses | Absolute camera poses of stereo key frames (Since R2024a) |

plot | Plot 3-D map points and estimated camera trajectory in stereo visual SLAM (Since R2024a) |

reset | Reset stereo visual SLAM object (Since R2024a) |

Topics

- Implement Visual SLAM in MATLAB

Understand the visual simultaneous localization and mapping (vSLAM) workflow and how to implement it using MATLAB.

- Choose SLAM Workflow Based on Sensor Data

Choose the right simultaneous localization and mapping (SLAM) workflow and find topics, examples, and supported features.

- Develop Visual SLAM Algorithm Using Unreal Engine Simulation (Automated Driving Toolbox)

Develop a visual simultaneous localization and mapping (SLAM) algorithm using image data from the Unreal Engine® simulation environment.

Featured Examples

Simulate RGB-D Visual SLAM System with Cosimulation in Gazebo and Simulink

Simulate an RGB-D visual simultaneous localization and mapping (SLAM) system to estimate camera poses using data from a mobile robot in Gazebo.

(ROS Toolbox)

- Since R2024b

Performant Monocular Visual-Inertial SLAM

Use visual inputs from a camera and positional data from an IMU to perform viSLAM in real time.

- Since R2025a

Monocular Visual-Inertial SLAM

Perform SLAM by combining images captured by a monocular camera with measurements from an IMU sensor.

Performant and Deployable Monocular Visual SLAM

Use visual inputs from a camera to perform vSLAM and generate multi-threaded C/C++ code.

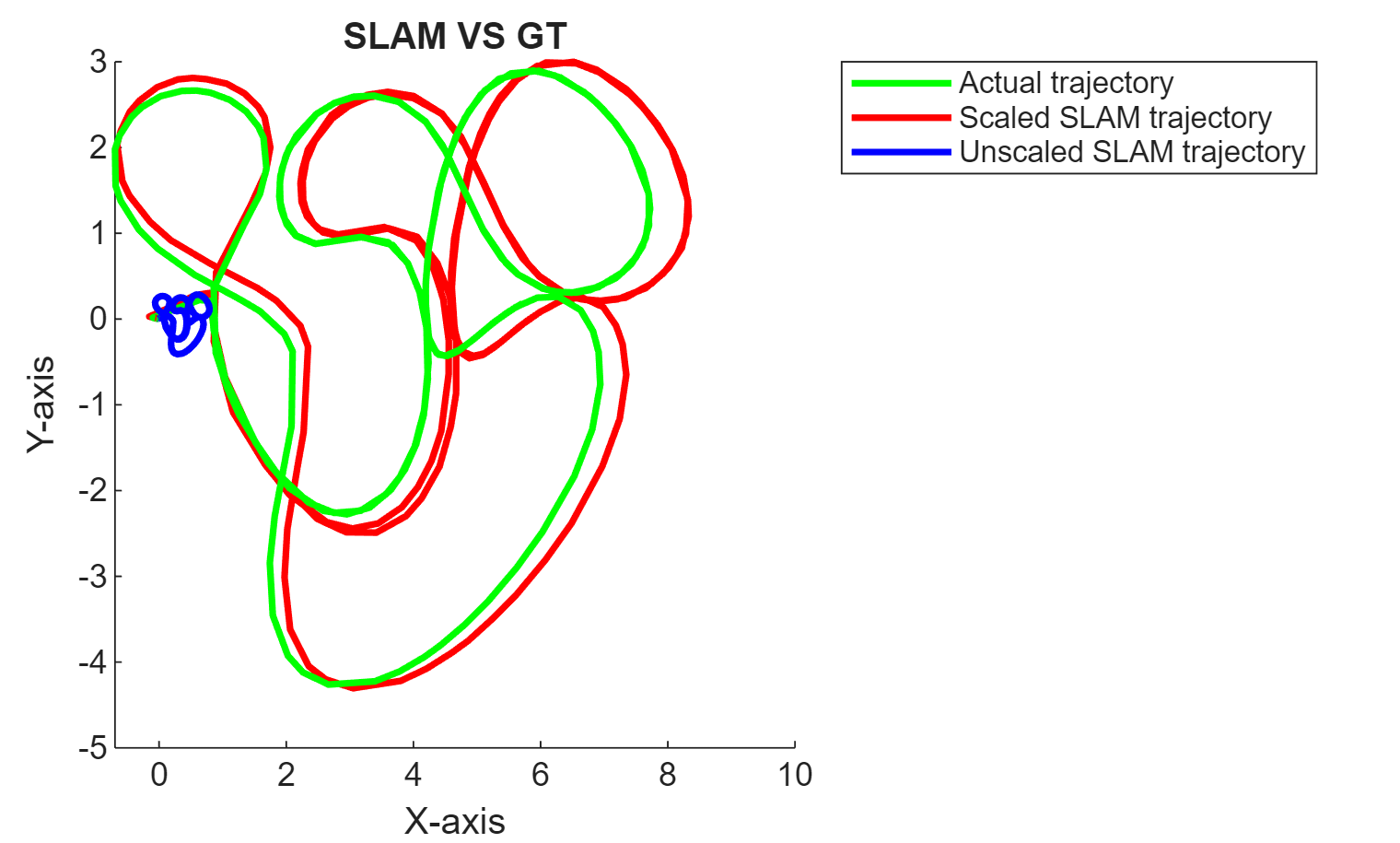

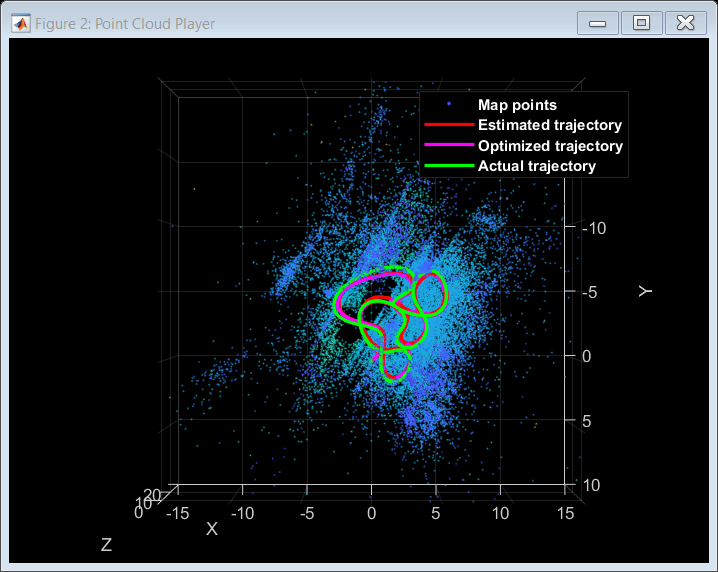

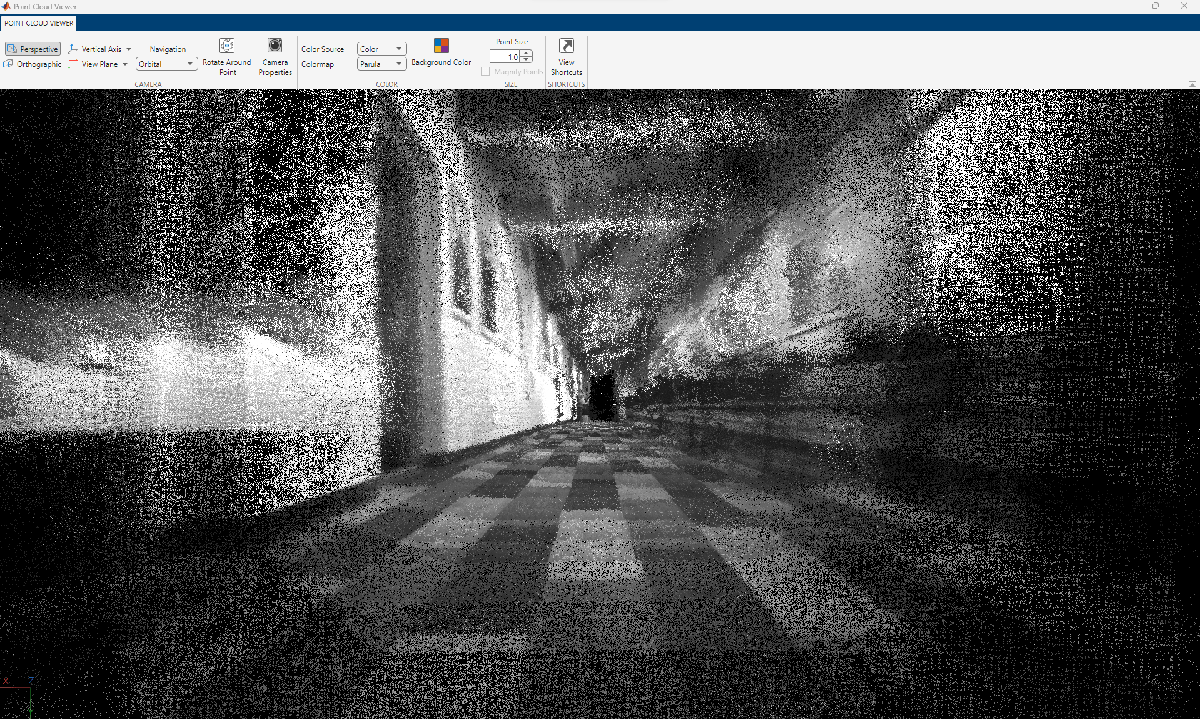

Monocular Visual Simultaneous Localization and Mapping

Process image data from a monocular camera to build a map of the environment and estimate the trajectory of the camera.

Performant and Deployable Stereo Visual SLAM with Fisheye Images

Use fisheye image data from a stereo camera to perform vSLAM and generate multi-threaded C/C++ code.

Stereo Visual Simultaneous Localization and Mapping

Process image data from a stereo camera to build a map of an outdoor environment and estimate the trajectory of the camera.

Build and Deploy Visual SLAM Algorithm with ROS in MATLAB

Implement a vSLAM algorithm that estimates poses for the TUM RGB-D Benchmark, generate C++ code for it, and deploy it as a ROS node to a remote device.

Visual Localization in a Parking Lot

Develop a visual localization system using synthetic image data from the Unreal Engine® simulation environment.

Stereo Visual SLAM for UAV Navigation in 3D Simulation

Develop a visual SLAM algorithm for a UAV equipped with a stereo camera.

Estimate Camera-to-IMU Transformation Using Extrinsic Calibration

Estimate the SE(3) transformation that defines the spatial relationship between the camera and the IMU.
