detect

Syntax

Description

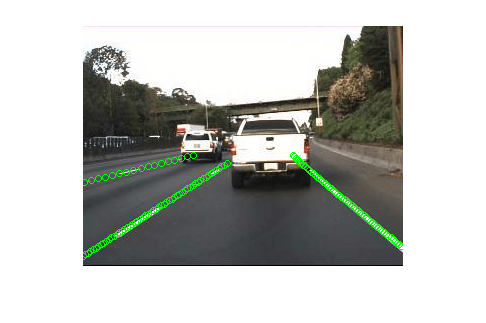

Detect Lane Boundaries in Image Coordinate System

lanePoints = detect(detector,I) detects lane boundaries in the image I, using a laneBoundaryDetector object, detector. The function returns the lane boundary points detected in the input image as a set of pixel coordinates, lanePoints.
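As a minimal sketch of the single-image syntax, the snippet below detects lane boundary points and overlays them on the image. It rests on several assumptions that are not stated on this page: that calling laneBoundaryDetector with no arguments returns a ready-to-use pretrained detector, that "roadImage.png" is a placeholder for your own image file, and that lanePoints is a cell array holding one P-by-2 [x y] matrix per detected lane boundary. Check the Output Arguments section before relying on that layout.

```matlab
% Create the detector. Assumption: the no-argument constructor returns a
% pretrained detector (see the laneBoundaryDetector reference page).
detector = laneBoundaryDetector;

% Read a road image. "roadImage.png" is a placeholder file name.
I = imread("roadImage.png");

% Detect lane boundary points in pixel (image) coordinates.
lanePoints = detect(detector,I);

% Overlay the detected points on the image. Assumption: lanePoints is a
% cell array with one P-by-2 [x y] matrix per detected lane boundary.
imshow(I)
hold on
for k = 1:numel(lanePoints)
    pts = lanePoints{k};
    plot(pts(:,1),pts(:,2),"o-",LineWidth=2)
end
hold off
```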

lanePoints = detect(detector,batch) detects lane boundaries in each image of the batch of images batch.

lanePoints = detect(detector,imds) detects lane boundaries in a series of images associated with an ImageDatastore object, imds.

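lanePoints = detect(___,Name=Value) specifies options using one or more name-value arguments in addition to any combination of arguments from previous syntaxes. For example, DetectionThreshold=0.2 sets the lane detection score threshold to 0.2.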

[lanePoints,scores] = detect(___) additionally returns confidence scores, scores, for detected lanes in images.
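The name-value and two-output syntaxes can be combined, as in the following sketch. The file name "roadImage.png" is a placeholder, and the no-argument laneBoundaryDetector constructor is assumed to return a pretrained detector. The threshold is passed as a numeric value. How scores is organized (for example, one entry per detected lane) is not specified here, so the code only displays it.

```matlab
% Assumption: the no-argument constructor returns a pretrained detector.
detector = laneBoundaryDetector;

% "roadImage.png" is a placeholder file name.
I = imread("roadImage.png");

% Set the lane detection score threshold to 0.2 and also request the
% confidence scores of the detections.
[lanePoints,scores] = detect(detector,I,DetectionThreshold=0.2);

% Inspect the returned scores without assuming a particular layout.
disp(scores)
```

Comparing the scores returned at different DetectionThreshold values is one way to choose a threshold for your own images.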

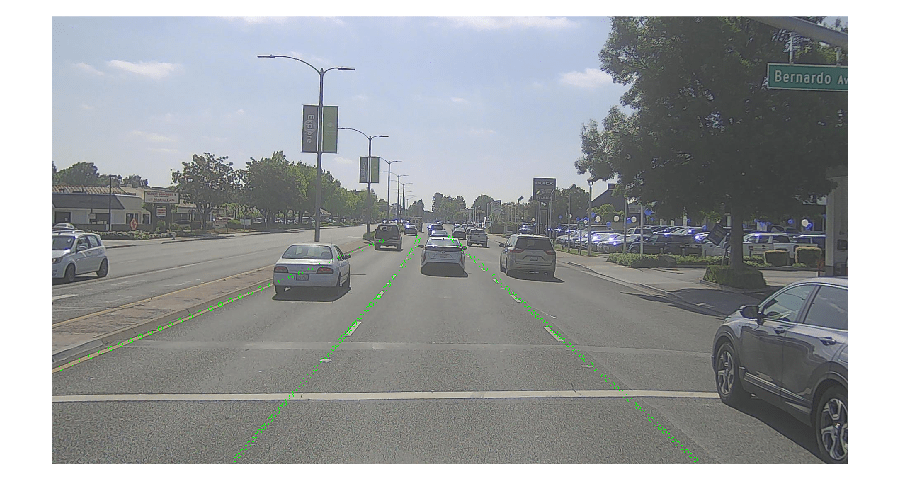

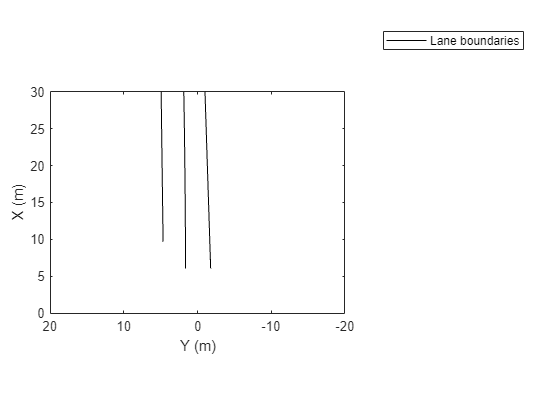

Detect Lane Boundaries in Vehicle Coordinate System

[lanePointsVehicle,laneBoundaries] = detect(detector,I,sensor) detects and returns the lane boundary points lanePointsVehicle in the vehicle coordinate system by using the monoCamera object sensor. This object function also returns lane boundaries, laneBoundaries.
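The vehicle-coordinate syntax needs a configured monoCamera object. The sketch below builds one from illustrative values; the focal length, principal point, image size, mounting height, and pitch are made-up numbers, not calibration results, and "roadImage.png" is a placeholder file name. Replace them with the parameters of your own camera, and use an image whose size matches imageSize.

```matlab
% Illustrative camera intrinsics (assumed values, not a real calibration).
focalLength    = [800 800];   % [fx fy] in pixels
principalPoint = [320 240];   % [cx cy] in pixels
imageSize      = [480 640];   % [rows cols]
intrinsics = cameraIntrinsics(focalLength,principalPoint,imageSize);

% Illustrative mounting: 1.5 m above the ground, pitched 1 degree down.
height = 1.5;
sensor = monoCamera(intrinsics,height,Pitch=1);

% Assumption: the no-argument constructor returns a pretrained detector.
detector = laneBoundaryDetector;

% "roadImage.png" is a placeholder; its size should match imageSize.
I = imread("roadImage.png");

% Detect lane boundary points in vehicle coordinates, and also return
% the lane boundaries.
[lanePointsVehicle,laneBoundaries] = detect(detector,I,sensor);

% Inspect the type of the returned boundaries without assuming it.
disp(class(laneBoundaries))
```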

[lanePointsVehicle,laneBoundaries] = detect(detector,batch,sensor) detects lane boundary points for the batch of images batch.

[lanePointsVehicle,laneBoundaries] = detect(detector,imds,sensor) detects lane boundary points for a series of images associated with an ImageDatastore object, imds.

[lanePointsVehicle,laneBoundaries] = detect(___,Name=Value) specifies options using one or more name-value arguments in addition to any combination of arguments from the previous three syntaxes. For example, ExecutionEnvironment="cpu" uses the CPU to execute the function.
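For a folder of images, the datastore syntax can be combined with a name-value argument, as sketched below. The folder name "roadImages" and all camera values are placeholders, the no-argument laneBoundaryDetector constructor is assumed to return a pretrained detector, and every image in the folder is assumed to match the size given in the camera intrinsics.

```matlab
% Collect the images in a folder. "roadImages" is a placeholder name.
imds = imageDatastore("roadImages");

% Illustrative camera configuration (assumed values, not a calibration).
intrinsics = cameraIntrinsics([800 800],[320 240],[480 640]);
sensor = monoCamera(intrinsics,1.5);

% Assumption: the no-argument constructor returns a pretrained detector.
detector = laneBoundaryDetector;

% Run detection on every image in the datastore, forcing CPU execution.
[lanePointsVehicle,laneBoundaries] = detect(detector,imds,sensor, ...
    ExecutionEnvironment="cpu");
```

Forcing "cpu" can be useful on machines without a supported GPU; see the Name-Value Arguments section for the other accepted values.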

Note: This feature also requires the Deep Learning Toolbox™ and the Deep Learning Toolbox Converter for ONNX™ Model Format support package. You can install the Deep Learning Toolbox Converter for ONNX Model Format support package from the Add-On Explorer. For more information about installing add-ons, see Get and Manage Add-Ons.

Examples

Input Arguments

Name-Value Arguments

Output Arguments

References

[1] Hesai and Scale. PandaSet. Accessed September 18, 2025. https://pandaset.org/. The PandaSet data set is provided under the CC-BY-4.0 license.