Quantization, Projection, and Pruning

Compress a deep neural network by performing quantization, projection, or pruning

Use the Deep Learning Toolbox Model Compression Library support package together with Deep Learning Toolbox™ to reduce the memory footprint and computational requirements of a deep neural network in the following ways.

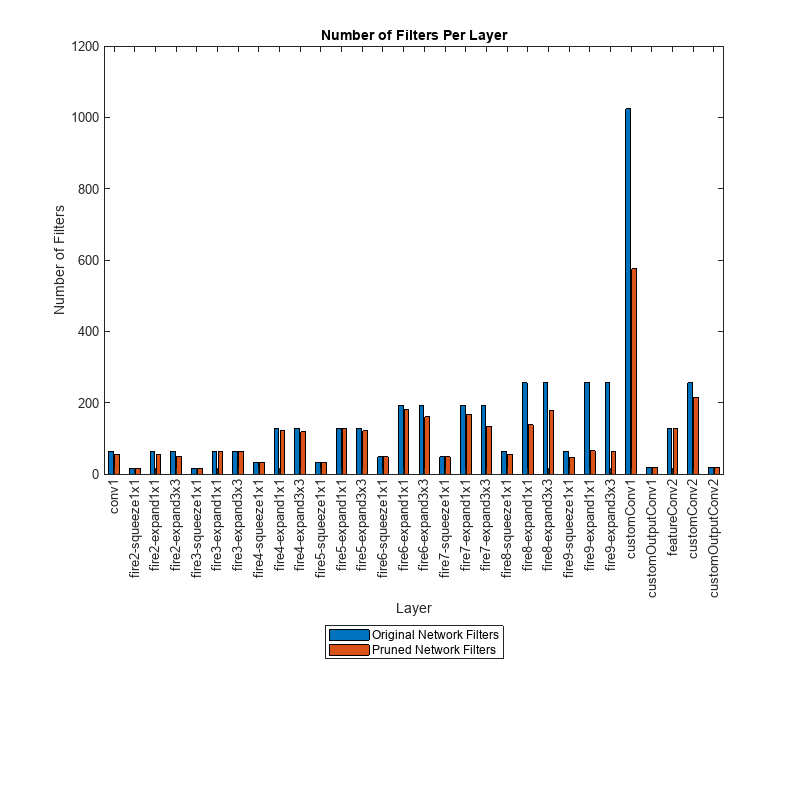

Prune filters from convolution layers by using a first-order Taylor approximation. You can then generate C/C++ or CUDA® code from the pruned network.
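The Python sketch below illustrates the kind of score that first-order Taylor pruning relies on: the change in loss caused by removing a filter's feature map is approximated by the magnitude of the activation multiplied by the loss gradient. It is not the toolbox's API. `taylor_scores` and `filters_to_prune` are hypothetical names, the sketch assumes per-filter activations and gradients have already been recorded, and the averaging order (mean over positions, absolute value, then mean over samples) is one common formulation rather than the toolbox's documented one.

```python
def taylor_scores(activations, gradients):
    """Return one first-order Taylor importance score per filter.

    activations[k][n] and gradients[k][n] are assumed to hold the flattened
    feature map of filter k for sample n and the loss gradient with respect
    to it. For each sample, |mean(a * dL/da)| estimates the loss change from
    zeroing that feature map; the score averages this over samples.
    """
    scores = []
    for acts_k, grads_k in zip(activations, gradients):
        per_sample = []
        for a, g in zip(acts_k, grads_k):
            contrib = sum(ai * gi for ai, gi in zip(a, g)) / len(a)
            per_sample.append(abs(contrib))
        scores.append(sum(per_sample) / len(per_sample))
    return scores


def filters_to_prune(scores, num_prune):
    """Return the indices (ascending) of the num_prune lowest-scoring filters."""
    order = sorted(range(len(scores)), key=lambda k: scores[k])
    return sorted(order[:num_prune])
```

In an iterative workflow, scores are typically accumulated over several mini-batches, a few of the lowest-scoring filters are removed, and the network is fine-tuned before the next pruning step.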

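Project layers by performing principal component analysis (PCA) on the layer activations, using a data set representative of the training data, and then applying linear projections to the layers' learnable parameters. When you deploy the network to embedded hardware using library-free C/C++ code generation, forward passes of a projected deep neural network are typically faster.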
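To make the idea of projection concrete, the NumPy sketch below computes the top-k principal directions of a fully connected layer's input activations and factors the layer into two smaller linear maps. Several choices are assumptions of this sketch, not the toolbox's documented algorithm: it uses only the layer's input activations, it handles a single fully connected layer, and it folds the part of the activation mean outside the principal subspace into the bias. The function names are hypothetical.

```python
import numpy as np


def pca_basis(activations, k):
    """Return a (d, k) matrix whose columns are the top-k principal directions.

    activations: (num_samples, d) array of layer inputs recorded while running
    a representative data set through the network.
    """
    centered = activations - activations.mean(axis=0)
    cov = centered.T @ centered / (activations.shape[0] - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)   # symmetric input, ascending eigenvalues
    top = np.argsort(eigvals)[::-1][:k]      # indices of the k largest eigenvalues
    return eigvecs[:, top]


def project_fully_connected(W, b, Q, mean):
    """Replace y = W @ x + b by y = second @ (first @ x) + new_b.

    W: (n_out, d), b: (n_out,), Q: (d, k), mean: (d,) activation mean.
    Uses x ~= mean + Q @ Q.T @ (x - mean), so the component of the mean
    outside the principal subspace is folded into the bias.
    Learnable weights drop from n_out*d to k*d + n_out*k.
    """
    first = Q.T                              # (k, d): project onto the subspace
    second = W @ Q                           # (n_out, k)
    new_b = b + W @ (mean - Q @ (Q.T @ mean))
    return first, second, new_b
```

When the activations lie close to a k-dimensional affine subspace, the two-layer form reproduces the original outputs closely while storing far fewer weights; the further the data is from such a subspace, the larger the approximation error, which is why fine-tuning usually follows projection.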

Quantize the weights, biases, and activations of layers to reduced-precision scaled integer data types. You can then generate C/C++, CUDA, or HDL code from these quantized networks for deployment to GPUs, FPGAs, or CPUs.
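As an illustration of what scaling to a reduced-precision integer type means, the Python sketch below maps floating-point values to signed 8-bit integers using a power-of-two scale of 2^-frac, choosing `frac` so that the largest magnitude still fits. Binary-point (power-of-two) scaling and the max-magnitude rule are assumptions of this sketch. The toolbox collects ranges through calibration, and its exact scaling rules may differ. The function names are hypothetical.

```python
import math


def choose_fraction_length(values, word_length=8):
    """Largest frac such that max|value| * 2**frac fits in a signed integer."""
    max_abs = max(abs(v) for v in values)
    max_int = 2 ** (word_length - 1) - 1        # 127 for 8-bit signed
    if max_abs == 0:
        return 0
    return math.floor(math.log2(max_int / max_abs))


def quantize(values, frac, word_length=8):
    """Scale by 2**frac, round (Python's round() sends ties to even), saturate."""
    lo, hi = -(2 ** (word_length - 1)), 2 ** (word_length - 1) - 1
    return [min(hi, max(lo, round(v * 2 ** frac))) for v in values]


def dequantize(ints, frac):
    """Recover approximate real values: real = integer * 2**-frac."""
    return [q * 2.0 ** -frac for q in ints]
```

For example, `[0.5, -0.75, 0.1, 0.0]` gets `frac = 7` and quantizes to `[64, -96, 13, 0]`. Each dequantized value is within half a step (2^-8) of the original, which is the rounding error that scaled-integer quantization trades for a four-fold reduction in storage relative to single precision.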

Analyze networks for compression by using the Deep Network Designer app.

For a detailed overview of the compression techniques available in Deep Learning Toolbox Model Compression Library, see Reduce Memory Footprint of Deep Neural Networks.

Functions

Apps

| App | Description |
| --- | --- |
| Deep Network Quantizer | Quantize deep neural network to 8-bit scaled integer data types |

Topics

Overview

- Reduce Memory Footprint of Deep Neural Networks

Learn about neural network compression techniques, including pruning, projection, and quantization.
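The memory savings of the three techniques come down to simple parameter arithmetic. The Python sketch below counts the learnable parameters of a fully connected layer, of its rank-k projected form, and of a 2-D convolution layer, and converts counts to bytes. It assumes every parameter, including biases, is stored at the same width and ignores activations and metadata; in practice, biases of quantized layers may be kept at a wider type. The function names are hypothetical.

```python
def fc_params(n_in, n_out):
    """Weights plus one bias per output of a fully connected layer."""
    return n_in * n_out + n_out


def projected_fc_params(n_in, n_out, rank):
    """Parameters after factoring the layer through a rank-`rank` subspace:
    an (n_in -> rank) projection and a (rank -> n_out) layer with bias."""
    return n_in * rank + rank * n_out + n_out


def conv2d_params(kernel_h, kernel_w, in_channels, num_filters):
    """Filter weights plus one bias per filter of a 2-D convolution layer."""
    return kernel_h * kernel_w * in_channels * num_filters + num_filters


def footprint_bytes(num_params, bytes_per_param):
    """Storage for the parameters alone (4 bytes for single, 1 for int8)."""
    return num_params * bytes_per_param
```

For a 1024-by-1024 fully connected layer, this gives 4,198,400 bytes in single precision, 1,049,600 bytes at 8 bits, and 528,384 bytes for a rank-64 projection kept in single precision. Pruning half of the 128 filters of a 3-by-3 convolution with 64 input channels lowers its parameter count from 73,856 to 36,928, and also removes the matching input channels of the next layer.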

Pruning

- Analyze and Compress 1-D Convolutional Neural Network

Analyze a 1-D convolutional network for compression and compress it using Taylor pruning and projection. (Since R2024b)

- Parameter Pruning and Quantization of Image Classification Network

Use parameter pruning and quantization to reduce network size.

- Prune Image Classification Network Using Taylor Scores

This example shows how to reduce the size of a deep neural network using Taylor pruning.

- Prune Filters in a Detection Network Using Taylor Scores

This example shows how to reduce network size and increase inference speed by pruning convolutional filters in a you only look once (YOLO) v3 object detection network.

- Prune and Quantize Convolutional Neural Network for Speech Recognition

Compress a convolutional neural network (CNN) to prepare it for deployment on an embedded system.

Projection and Knowledge Distillation

- Compress Neural Network Using Projection

This example shows how to compress a neural network using projection and principal component analysis.

- Evaluate Code Generation Inference Time of Compressed Deep Neural Network

This example shows how to compare the inference time of a compressed deep neural network for battery state-of-charge estimation. (Since R2023b)

- Train Smaller Neural Network Using Knowledge Distillation

This example shows how to reduce the memory footprint of a deep learning network by using knowledge distillation. (Since R2023b)
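Knowledge distillation trains a small student network to imitate a larger teacher. The Python sketch below shows one widely used form of the distillation loss: a weighted sum of ordinary cross-entropy on the true label and the KL divergence between temperature-softened teacher and student distributions, scaled by the squared temperature as proposed by Hinton et al. The weight `alpha`, the default temperature, and the function names are assumptions for illustration; the loss used in the Train Smaller Neural Network Using Knowledge Distillation example may differ.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of logits / temperature, shifted by the max for stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=4.0, alpha=0.5):
    """alpha * hard-label cross-entropy + (1 - alpha) * T^2 * KL(teacher || student).

    Higher temperatures soften both distributions so the student also learns
    the teacher's relative scores for the wrong classes; the T^2 factor keeps
    the soft-target term's gradient magnitude comparable across temperatures.
    """
    hard = -math.log(softmax(student_logits)[label])
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps))
             for pt, ps in zip(p_t, p_s) if pt > 0)
    return alpha * hard + (1 - alpha) * temperature ** 2 * kl
```

When the student's logits equal the teacher's, the KL term is zero and only the weighted cross-entropy remains.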

Quantization

- Quantization of Deep Neural Networks

Overview of the deep learning quantization tools and workflows.

- Data Types and Scaling for Quantization of Deep Neural Networks

Understand the effects of quantization and how to visualize the dynamic ranges of network convolution layers.

- Quantization Workflow Prerequisites

Products required for the quantization of deep learning networks.

- Supported Layers for Quantization

Deep neural network layers that are supported for quantization.

- Prepare Data for Quantizing Networks

Supported datastores for quantization workflows.

- Quantize Multiple-Input Network Using Image and Feature Data

Quantize a multiple-input network using image and feature data.

- Export Quantized Networks to Simulink and Generate Code

Export a quantized neural network to Simulink and generate code from the exported model.
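Visualizing dynamic ranges amounts to binning values by powers of two and checking which bins a given scaled-integer type can represent. The Python sketch below does both for a signed type with scale 2^-frac: values above the largest representable magnitude overflow, and nonzero values smaller than half a step round to zero (underflow). The power-of-two bin convention, the half-step underflow rule, and the function names are assumptions of this sketch, not the exact computation the toolbox performs.

```python
import math
from collections import Counter


def log2_histogram(values):
    """Count nonzero values per power-of-two bin floor(log2(|v|))."""
    return Counter(math.floor(math.log2(abs(v))) for v in values if v != 0)


def coverage(values, frac, word_length=8):
    """Return (overflow, underflow, in_range) counts for scale 2**-frac."""
    step = 2.0 ** -frac                          # value of one integer step
    hi = (2 ** (word_length - 1) - 1) * step     # largest representable value
    lo = -(2 ** (word_length - 1)) * step        # most negative representable value
    overflow = sum(1 for v in values if v > hi or v < lo)
    underflow = sum(1 for v in values if v != 0 and abs(v) < step / 2)
    return overflow, underflow, len(values) - overflow - underflow
```

Raising `frac` shifts the representable window toward smaller magnitudes, reducing underflow at the cost of more overflow, so the choice of scaling is a trade-off driven by where most of the histogram mass lies.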

Quantization for GPU Target

- Generate INT8 Code for Deep Learning Networks (GPU Coder)

Quantize and generate code for a pretrained convolutional neural network.

- Quantize Residual Network Trained for Image Classification and Generate CUDA Code

This example shows how to quantize the learnable parameters in the convolution layers of a deep learning neural network that has residual connections and has been trained for image classification with CIFAR-10 data.

- Quantize Layers in Object Detectors and Generate CUDA Code

This example shows how to generate CUDA® code for an SSD vehicle detector and a YOLO v2 vehicle detector that perform inference computations in 8-bit integers for the convolutional layers.

- Quantize Semantic Segmentation Network and Generate CUDA Code

Quantize a convolutional neural network trained for semantic segmentation and generate CUDA code.

Quantization for FPGA Target

- Quantize Network for FPGA Deployment (Deep Learning HDL Toolbox)

Reduce the memory footprint of a deep neural network by quantizing the weights, biases, and activations of convolution layers to 8-bit scaled integer data types.

- Classify Images on FPGA Using Quantized Neural Network (Deep Learning HDL Toolbox)

This example shows how to use Deep Learning HDL Toolbox™ to deploy a quantized deep convolutional neural network (CNN) to an FPGA.

- Classify Images on FPGA by Using Quantized GoogLeNet Network (Deep Learning HDL Toolbox)

This example shows how to use Deep Learning HDL Toolbox™ to deploy a quantized GoogLeNet network to classify an image.

Quantization for CPU Target

- Generate int8 Code for Deep Learning Networks (MATLAB Coder)

Quantize and generate code for a pretrained convolutional neural network.

- Generate INT8 Code for Deep Learning Network on Raspberry Pi (MATLAB Coder)

Generate code for a deep learning network that performs inference computations in 8-bit integers.

- Compress Image Classification Network for Deployment to Resource-Constrained Embedded Devices

This example shows how to reduce the memory footprint and computation requirements of an image classification network for deployment on resource-constrained embedded devices such as the Raspberry Pi™.
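Performing inference computations in 8-bit integers means multiplying int8 values, summing the products in a wider integer accumulator, and rescaling the result back to an 8-bit output. The Python sketch below shows this for a single dot product with power-of-two scales: the accumulator carries scale 2^-(frac_x + frac_w), so a rounded right shift by (frac_x + frac_w - frac_out) moves it to the output scale before saturation. This is a generic illustration under those assumptions. Code generated by MATLAB Coder or GPU Coder may use different rounding, accumulation, and scaling schemes, and `int8_dot` is a hypothetical name.

```python
def int8_dot(q_x, q_w, frac_x, frac_w, frac_out, word_length=8):
    """Integer-only dot product of two quantized vectors.

    q_x, q_w: integers with real values q * 2**-frac_x and q * 2**-frac_w.
    Returns an integer with real value out * 2**-frac_out, saturated to the
    signed word_length range. Python ints stand in for a 32-bit accumulator.
    """
    acc = sum(a * b for a, b in zip(q_x, q_w))      # scale 2**-(frac_x + frac_w)
    shift = frac_x + frac_w - frac_out
    if shift > 0:
        out = (acc + (1 << (shift - 1))) >> shift   # round half up, then rescale
    elif shift == 0:
        out = acc
    else:
        out = acc << -shift                          # output scale is finer
    lo, hi = -(1 << (word_length - 1)), (1 << (word_length - 1)) - 1
    return max(lo, min(hi, out))                     # saturate to the int8 range
```

For example, the real vectors `[0.5, -0.25, 1.0]` (frac 6) and `[0.5, 0.5, 0.25]` (frac 7) quantize to `[32, -16, 64]` and `[64, 64, 32]`; `int8_dot` with `frac_out=7` returns 48, which represents 48/128 = 0.375, the exact floating-point dot product.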