Automate Labeling of Objects in Video Using RAFT Optical Flow

This example shows how to automatically transfer polygon region-of-interest (ROI) annotations from one labeled frame to subsequent frames using the RAFT deep learning-based optical flow algorithm.

Optical flow plays a crucial role in enhancing the capabilities of automated video labeling systems by providing detailed motion information from one frame to the next. This information allows for accurately and efficiently propagating dense per-pixel labels, such as instance segmentation masks around objects, to subsequent frames. Human labeling effort is required only to specify the labels on the first frame of a video, while the label propagation using optical flow is able to transfer these labels to subsequent video frames without requiring human intervention.

This example uses a pretrained RAFT optical flow estimation network, a specialized convolutional neural network (CNN) for optical flow estimation [1][2], to propagate a predefined polygon ROI around an object of interest from one frame to the next in a video sequence. This process involves estimating the optical flow and applying post-processing techniques to refine the propagated ROI. The refined ROI is then propagated across multiple consecutive frames, and the results are visualized for evaluation. Finally, the developed algorithm is added to the Video Labeler app as a custom automation algorithm class.

Label Propagation Algorithm

Use VideoReader to load a video depicting a traffic scene and read the first frame. Then, display the first frame using imshow.

vidReader = VideoReader("visiontraffic.avi", CurrentTime=11);

firstFrame = readFrame(vidReader);

h = figure;

imshow(firstFrame)

Load annotations for the first frame. This manual step is essential for defining the object of interest precisely, and is typically performed on the first frame in a video.

load("firstFrameAnnotation.mat")

Display the labeled object in the first frame using a polygon ROI.

roi = drawpolygon(h.CurrentAxes,Position=maskVertices);

Estimate Flow Between Video Frames

Specify the optical flow estimation method as opticalFlowRAFT.

flowModel = opticalFlowRAFT;

Initialize the flow model using the first frame.

estimateFlow(flowModel,firstFrame);

Estimate the flow between the first two frames.

secondFrame = readFrame(vidReader);
flow = estimateFlow(flowModel,secondFrame);

Plot the optical flow vectors on the first frame image.

figure
imshow(firstFrame)
hold on
plot(flow, DecimationFactor=[10 5], ScaleFactor=1, color="g");
hold off

Propagate Labels to Next Frame

Create a binary mask image based on the provided polygon ROI annotation of the car. Pixels within the ROI are set to true (indicating presence of the object) and those outside are set to false (indicating absence of the object).

mask = createMask(roi);
figure;
imshow(mask)

Create a displacement field based on the estimated motion to warp the binary mask from the first frame into the second.

D = -cat(3,flow.Vx,flow.Vy);
nextMask = imwarp(mask,D);
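The sign of the displacement field can be checked on synthetic data. The following sketch is an illustration and not part of the original example: it assumes a uniform flow of 3 pixels to the right, wraps it in an opticalFlow object, and warps a small square mask. imwarp treats the displacement field as the offset at which each output pixel samples the input image, so the forward flow is negated to move the mask in the direction of motion. The variable names (toyMask, toyFlow, toyD) and the choice of nearest-neighbor interpolation are assumptions made for this illustration.

```matlab
% Synthetic check of the displacement-field sign convention (illustrative only).
toyMask = false(20,20);
toyMask(8:12,5:9) = true;              % 5-by-5 square object

% Assumed uniform motion: every pixel moves 3 pixels right and 0 pixels down.
Vx = 3*ones(20,20,"single");
Vy = zeros(20,20,"single");
toyFlow = opticalFlow(Vx,Vy);

% For each output pixel (x,y), imwarp samples the input at
% (x + D(:,:,1), y + D(:,:,2)), so the forward flow is negated.
toyD = -cat(3,toyFlow.Vx,toyFlow.Vy);
warpedToyMask = imwarp(toyMask,toyD,"nearest");

% The square moves from columns 5:9 to columns 8:12.
[~,cols] = find(warpedToyMask);
disp([min(cols) max(cols)])
```

Nearest-neighbor interpolation keeps the toy mask strictly binary. The example itself relies on the default interpolation of imwarp.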

Display the two frames and their masks.

figure
tiledlayout(1,2,TileSpacing="tight")
nexttile
imshow(insertObjectMask(firstFrame,mask))
title("First frame with manual mask")
nexttile
imshow(insertObjectMask(secondFrame,nextMask))
title("Second frame with propagated mask")

Refine Propagated Mask Using Image Morphology

Display the propagated ROI for the second frame.

figure;
imshow(nextMask)

The automatically propagated mask in the second frame exhibits minor irregularities, particularly around the side mirrors, where the RAFT optical flow algorithm's estimation is not perfect due to the small size of these regions.

Apply image erosion to remove small disconnected components and any regions of the road that were included in the propagated ROI. Note that optical flow tracks every point in the image, so background regions, such as the road, that fall inside the initial ROI are propagated along with the object and can amplify errors.

processedMask = imerode(nextMask, strel("diamond",4));

Apply image dilation with a slightly smaller structuring element to restore most of the mask border removed by the erosion.

processedMask = imdilate(processedMask, strel("diamond",3));
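To see what this erode-then-dilate pair does in isolation, the following sketch applies the same two structuring elements to a synthetic mask. The toy mask, with one large object and one 3-by-3 speckle, is an assumption made for illustration and is unrelated to the traffic video.

```matlab
% Toy mask: a 20-by-20 object plus a 3-by-3 speckle (illustrative data only).
toyMask = false(40,40);
toyMask(11:30,11:30) = true;     % object of interest
toyMask(3:5,34:36) = true;       % small disconnected component

% Erosion removes the speckle and shrinks the object.
eroded = imerode(toyMask,strel("diamond",4));

% Dilation with the smaller element grows the object back, but not fully.
refined = imdilate(eroded,strel("diamond",3));

fprintf("Pixels before: %d, after erosion: %d, after dilation: %d\n", ...
    nnz(toyMask),nnz(eroded),nnz(refined));
```

Because the dilation element is smaller than the erosion element, the refined mask always lies inside the input mask. The operation is therefore conservative: it trims the mask rather than extending it.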

Display the post-processed mask.

figure
imshow(processedMask)

Propagate Labels to Subsequent Video Frames

In the previous section, optical flow was used to propagate ROI labels from the first to the second frame. This method can be extended to propagate the polygon ROI drawn on the first frame to multiple subsequent frames using optical flow.

Reset flowModel, set the VideoReader to the first annotated frame and calculate optical flow for the starting frame.

reset(flowModel);
vidReader.CurrentTime = 11;
frame = readFrame(vidReader);
flow = estimateFlow(flowModel,frame);

Propagate the label to subsequent video frames using optical flow.

currentMask = mask;
frameCount = 2;
stopFrame = 12;
figure;
while frameCount <= stopFrame
    % Estimate the flow from the previous frame to the current frame.
    frame = readFrame(vidReader);
    flow = estimateFlow(flowModel,frame);

    % Warp the previous mask along the estimated motion.
    D = -cat(3,flow.Vx,flow.Vy);
    nextMask = imwarp(currentMask,D);

    % Refine the propagated mask using erosion followed by dilation.
    nextMask = imerode(nextMask, strel("diamond",4));
    nextMask = imdilate(nextMask, strel("diamond",3));

    overlayImage = insertObjectMask(frame,nextMask);
    imshow(overlayImage)

    currentMask = nextMask;
    frameCount = frameCount + 1;
end

The automatically propagated labels exhibit minor irregularities even after the morphology-based refinement operations. A manual refinement stage can efficiently correct these imperfections, requiring significantly less effort than entirely relabeling the video frames from scratch. This can be easily achieved by integrating and running the RAFT-based label propagation method as an automation algorithm in the Video Labeler app.

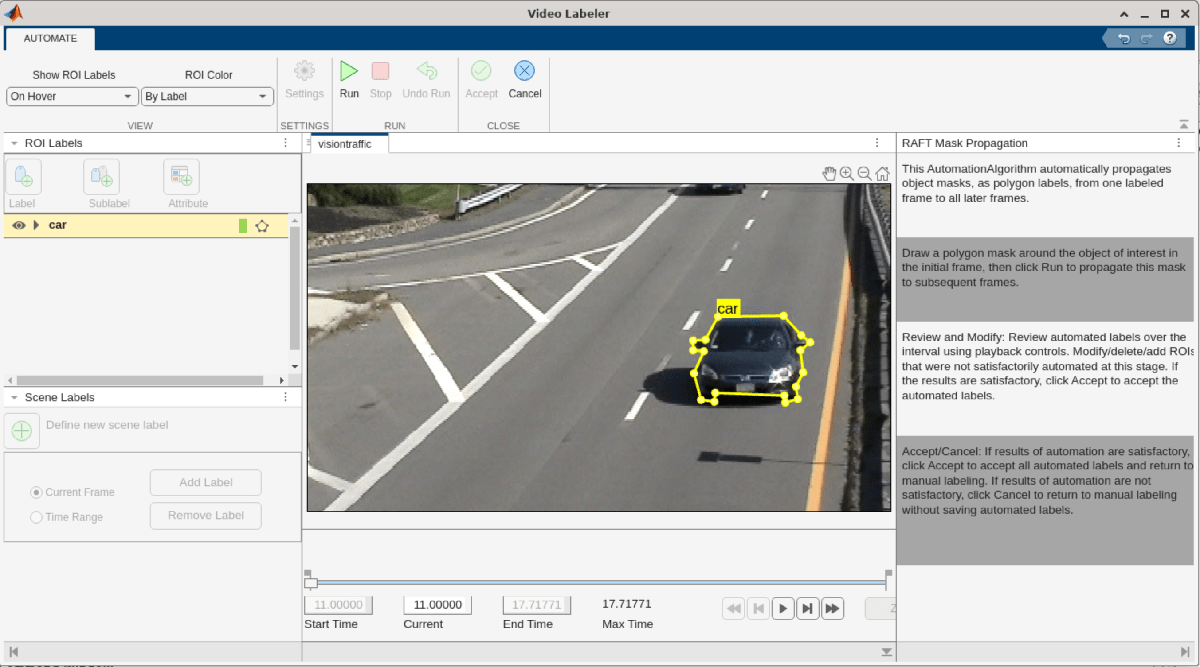

Integrate Label Propagation Algorithm into Video Labeler

Define Automation Algorithm

Incorporate the RAFT optical flow-based mask propagation algorithm into the Video Labeler app by creating a temporal automation class in MATLAB that inherits from the abstract base class vision.labeler.AutomationAlgorithm. This base class defines the API that the app uses to configure and run the algorithm. The Video Labeler app provides a convenient way to obtain an initial automation class template. The RAFTAutomationAlgorithm class is based on this template and provides a ready-to-use automation class for pixel labeling in videos.

These are the key components of the automation algorithm class:

The RAFTAutomationAlgorithm class properties. Use the properties of the RAFTAutomationAlgorithm class to create variables to hold the RAFT optical flow model and the object mask of the previous video frame.

The RAFTAutomationAlgorithm class constructor. Use the RAFTAutomationAlgorithm class constructor to initialize the optical flow model and load the initial mask for the starting video frame.

The run method. Use the run method to implement the core algorithm of this automation class. It is called for each video frame, and is expected to return pixel labels. Use the optical flow-based automatic mask propagation algorithm developed in the earlier sections of this example.

The properties and methods described above are implemented in RAFTAutomationAlgorithm.m, which is attached to this example as a supporting file. Inspect the source code for more details on implementing an automation algorithm class for the Video Labeler app. For more information, see Create Automation Algorithm for Labeling.
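The propagation steps operate on binary masks, whereas the labels in this workflow are polygon ROIs, so an automation class needs some way to convert between the two. The following sketch shows one possible round trip using poly2mask, bwboundaries, and reducepoly. It is an assumption about how such a conversion could be written, not a description of the attached RAFTAutomationAlgorithm.m. The vertex coordinates, image size, and simplification tolerance are illustrative values.

```matlab
% Hypothetical conversion steps; the attached class file may differ.
imageSize = [60 80];                            % [rows cols]
polyVertices = [20 15; 60 15; 60 45; 20 45];    % [x y] vertices of a polygon ROI

% Polygon -> mask: the form that the flow-based warp operates on.
polyMask = poly2mask(polyVertices(:,1),polyVertices(:,2), ...
    imageSize(1),imageSize(2));

% Mask -> polygon: trace the longest outer boundary, then thin the vertices.
boundaries = bwboundaries(polyMask,"noholes");
[~,longest] = max(cellfun(@(b) size(b,1),boundaries));
outline = fliplr(boundaries{longest});          % bwboundaries returns [row col]
newVertices = reducepoly(outline,0.01);         % tolerance chosen for illustration

fprintf("Boundary points: %d, polygon vertices after simplification: %d\n", ...
    size(outline,1),size(newVertices,1));
```

Keeping only the longest boundary discards small stray components, which complements the erosion step. Simplifying the outline keeps the resulting polygon easy to adjust by hand.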

Import Automation Algorithm

Create a +vision/+labeler/ folder within the current working folder. Copy the automation algorithm class file RAFTAutomationAlgorithm.m to the folder +vision/+labeler.

automationFolder = fullfile("+vision","+labeler");
mkdir(automationFolder);
copyfile("RAFTAutomationAlgorithm.m",automationFolder)

Start the Video Labeler app with a predefined polygon label definition for the "car" class. This creates only the label definition; the video frames themselves remain unlabeled.

gTruth = generateGroundTruth;
videoLabeler(gTruth)

Import the automation algorithm into the Video Labeler app by performing these steps.

On the Label tab, select Select Algorithm.

Select Add Algorithm, and then select Import Algorithm.

Navigate to the +vision/+labeler folder in the current working folder and choose RAFTAutomationAlgorithm.m from the file selection dialog box.

Run Automation Algorithm

Go to the 11th second of the video by setting this value in the Current Time field below the video playback scrubber.

Reopen the Select Algorithm list, select the newly imported RAFT Mask Propagation algorithm, and then click Automate.

Draw a polygon ROI around the entire visible car in this frame. Take care not to include background road areas in the polygon ROI for the car, as this can degrade the final results.

You can also select an existing polygon ROI and begin automation. In this case, the existing ROI seeds the automation algorithm, so you do not need to draw the initial ROI.

Click Run. The automation algorithm executes on each video frame, starting from the frame at the 11th second, automatically propagating the initial manually drawn polygon ROI to the other frames. After the run is completed, use the slider or arrow keys to scroll through all the images and verify the result of the automation algorithm.

If the results are not satisfactory, click Undo Run. You can modify the post-processing steps in the run method of the automation algorithm class and re-run the automation to refine your results:

If the propagated ROI labels are getting significantly smaller than the actual object of interest in subsequent frames, decrease the size of the structuring element in imerode, increase the size of the structuring element in imdilate, or try a combination of both.

If the propagated ROI labels are getting significantly larger than the actual object of interest in subsequent frames, or tracking large areas of the background, increase the size of the structuring element in imerode, decrease the size of the structuring element in imdilate, or try a combination of both.
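The effect of these two structuring element sizes accumulates over frames, because the refinement is applied to the output of the previous frame. The following sketch isolates that effect by repeatedly refining a static synthetic mask with three radius pairs. No optical flow is involved, and the toy mask, the number of passes, and the radius pairs other than the (4, 3) pair used in this example are assumptions made for illustration.

```matlab
% Illustrative experiment on a static toy mask (no optical flow involved).
toyMask = false(100,100);
toyMask(21:80,21:80) = true;

numFrames = 10;
radiusPairs = [4 3; 3 3; 3 4];       % [erode dilate] radii to compare
areas = zeros(numFrames+1,size(radiusPairs,1));

for k = 1:size(radiusPairs,1)
    m = toyMask;
    areas(1,k) = nnz(m);
    seErode = strel("diamond",radiusPairs(k,1));
    seDilate = strel("diamond",radiusPairs(k,2));
    for f = 1:numFrames
        % One refinement pass, as applied once per propagated frame.
        m = imdilate(imerode(m,seErode),seDilate);
        areas(f+1,k) = nnz(m);
    end
end

% Mask area in pixels after each pass, one column per radius pair.
disp(array2table(areas, ...
    VariableNames=["erode4_dilate3","erode3_dilate3","erode3_dilate4"]))
```

With a larger erosion radius the area shrinks on every pass, with equal radii it settles after the first pass, and with a larger dilation radius it grows on every pass. This matches the tuning guidance above: the difference between the two radii controls the per-frame drift of the mask size.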

You can manually fine-tune the automated results to fix minor errors while reviewing them in the Video Labeler app. Once you are satisfied with the object labels, click Accept to save and export the results of this labeling run.

Conclusion

This example demonstrated how to use the pretrained RAFT deep optical flow model, together with image morphology-based post-processing, to accelerate pixel labeling of object masks in the Video Labeler app using the AutomationAlgorithm interface. The algorithm can be extended and adapted to custom scenarios by modifying the automation algorithm class accordingly. For example, this class can be modified to propagate other ROI types, such as rectangles.

References

[1] Zachary Teed and Jia Deng. "RAFT: Recurrent All-Pairs Field Transforms for Optical Flow". In Proceedings of the 16th European Conference on Computer Vision (ECCV), 2020.

[2] Neelay Shah, Prajnan Goswami and Huaizu Jiang. "EzFlow: A modular PyTorch library for optical flow estimation using neural networks", Online 2021, https://github.com/neu-vi/ezflow.