Generate CUDA code for a Video Classification Network

This example shows how to generate CUDA® code for a deep learning network that classifies video and deploy the generated code onto the NVIDIA® Jetson™ Xavier board by using the MATLAB® Coder™ Support Package for NVIDIA Jetson and NVIDIA DRIVE® Platforms. The deep learning network has convolutional and bidirectional long short-term memory (BiLSTM) layers. The generated application reads the data from a specified video file as a sequence of video frames and outputs a label that classifies the activity in the video. This example generates code for the network trained in the example Classify Videos Using Deep Learning (Deep Learning Toolbox).

Third-Party and Hardware Requirements

NVIDIA Jetson board.

Ethernet crossover cable to connect the target board and host PC. Use this cable if you cannot connect the target board to a local network.

Supported Jetpack SDK that includes the CUDA and cuDNN libraries.

Environment variables on the target board for the compilers and libraries. For information on the supported versions of the compilers and libraries and their setup, see Prerequisites for Generating Code for NVIDIA Boards.

Verify NVIDIA Support Package Installation on Host

To generate and deploy code to an NVIDIA Jetson Xavier board, you need the MATLAB Coder Support Package for NVIDIA Jetson and NVIDIA DRIVE Platforms. Use the checkHardwareSupportPackageInstall function to verify that the host system can run this example. If the function does not return an error, the support package is correctly installed.

checkHardwareSupportPackageInstall();

Connect to the NVIDIA Hardware

The support package uses an SSH connection over TCP/IP to execute commands while building and running the generated CUDA code on the Jetson platform. You must connect the target platform to the same network as the host computer or use an Ethernet crossover cable to connect the board directly to the host computer. Refer to the NVIDIA documentation on how to set up and configure your board.

To communicate with the NVIDIA hardware, you must create a live hardware connection object by using the jetson function. You must know the host name or IP address, username, and password of the target board to create a live hardware connection object. For example, when connecting to the target board for the first time, create a live object for Jetson hardware by using the command:

hwobj = jetson('jetson-name','ubuntu','ubuntu');

The jetson object reuses the settings from the most recent successful connection to the Jetson hardware. This example establishes an SSH connection to the Jetson hardware using the settings stored in memory.

hwobj = jetson;

### Checking for CUDA availability on the target...
### Checking for 'nvcc' in the target system path...
### Checking for cuDNN library availability on the target...
### Checking for TensorRT library availability on the target...
### Checking for prerequisite libraries is complete.
### Gathering hardware details...
### Checking for third-party library availability on the target...
### Found new version of the MATLAB I/O server 25.1.1. Updating...
### Launching the server
### Server launch is successful
### Gathering hardware details is complete.
 Board name              : NVIDIA Jetson AGX Orin Developer Kit
 CUDA Version            : 12.2
 cuDNN Version           : 8.9
 TensorRT Version        : 8.6
 GStreamer Version       : 1.20.3
 V4L2 Version            : 1.22.1-2build1
 SDL Version             : 1.2
 OpenCV Version          : 4.8.0
 Available Webcams       : Logitech Webcam C925e,Logitech Webcam C925e
 Available GPUs          : Orin
 Available Digital Pins  : 7 11 12 13 15 16 18 19 21 22 23 24 26 29 31 32 33 35 36 37 38 40

If the connection fails, a diagnostic error message appears in the MATLAB Command Window. The most likely cause is an incorrect IP address or host name.
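To make a failed connection easier to diagnose, the first-time connection call can be wrapped in a try/catch block. The following is a minimal sketch, not part of the original example. The host name, user name, and password are the placeholder values used earlier in this example and must be replaced with the values for your board.

```matlab
% Placeholder connection details (assumptions); replace with your board's values.
boardAddress = 'jetson-name';
userName = 'ubuntu';
password = 'ubuntu';

try
    % Create the live hardware connection object over SSH.
    hwobj = jetson(boardAddress, userName, password);
catch connectionError
    % Report which address was tried together with the diagnostic message,
    % then rethrow so that later steps do not run without a connection.
    fprintf('Could not connect to %s: %s\n', boardAddress, connectionError.message);
    rethrow(connectionError);
end
```

Printing the address alongside the message helps confirm whether the failure is due to a mistyped host name or IP address.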

Verify GPU Environment

Use the coder.checkGpuInstall function to verify that the compilers and libraries necessary for running this example are set up correctly.

envCfg = coder.gpuEnvConfig('jetson');
envCfg.DeepLibTarget = 'cudnn';
envCfg.DeepCodegen = 1;
envCfg.Quiet = 1;
envCfg.HardwareObject = hwobj;
coder.checkGpuInstall(envCfg);
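Because the Quiet property suppresses the printed report, it can be useful to capture the outcome of the checks in a variable. The following sketch assumes that a jetson connection has already been established in this session and relies on coder.checkGpuInstall returning a results structure. The exact fields of that structure can vary by release, so the sketch only displays it.

```matlab
% Assumes an earlier successful jetson connection whose settings can be reused.
hwobj = jetson;

% Same environment configuration as in this example.
envCfg = coder.gpuEnvConfig('jetson');
envCfg.DeepLibTarget = 'cudnn';
envCfg.DeepCodegen = 1;
envCfg.Quiet = 1;
envCfg.HardwareObject = hwobj;

% Capture the check results instead of discarding them, then display them.
results = coder.checkGpuInstall(envCfg);
disp(results);
```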

The net_classify Entry-Point Function

The net_classify entry-point function hardcodes the name of a video file. You must change the hardcoded path to the location of the video file on your target hardware.

The entry-point function then reads the data from the file using a VideoReader object. The data is read into MATLAB as a sequence of images that represnt the video frames. The function then center-crops the data and passes it as an input to the trained network. The function uses the network trained in the Classify Videos Using Deep Learning (Deep Learning Toolbox) example. The function loads the dlnetwork object from the dlnet.mat file into a persistent variable and reuses the persistent object for subsequent prediction calls.

type('net_classify.m')function out = net_classify() %#codegen

% Copyright 2019-2024 The MathWorks, Inc.

if coder.target('MATLAB')

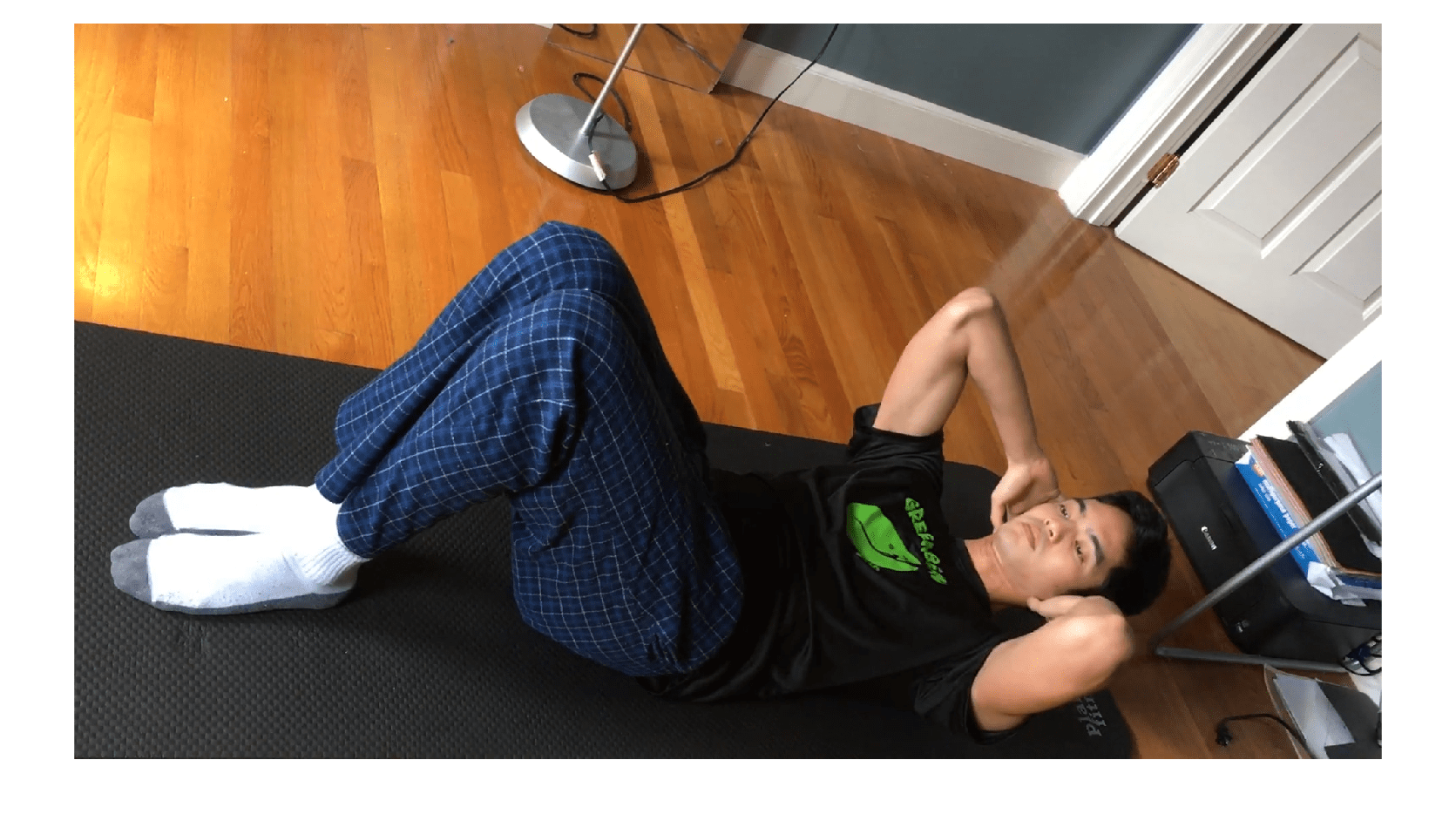

videoFilename = 'situp.mp4';

else

videoFilename = '/home/ubuntu/VideoClassify/situp.mp4';

end

frameSize = [1920 1080];

% read video

video = readVideo(videoFilename, frameSize);

% specify network input size

inputSize = [224 224 3];

% crop video

croppedVideo = centerCrop(video,inputSize);

% A persistent object dlnet is used to load the dlnetwork object. At the

% first call to this function, the persistent object is constructed and

% setup. When the function is called subsequent times, the same object is

% reused to call predict on inputs, thus avoiding reconstructing and

% reloading the network object. A categorial arrary labels is also loaded

% for classification.

persistent dlnet;

persistent labels;

if isempty(dlnet)

dlnet = coder.loadDeepLearningNetwork('dlnet.mat');

labels = coder.load('labels.mat');

end

% The dlnetwork object require dlarrays as inputs, wrap the cropped input

% in a dlarray

dlIn = dlarray(single(croppedVideo), 'SSCT');

% pass in cropped input to network

dlOut = predict(dlnet, dlIn);

scores = extractdata(dlOut);

classNames = labels.classNames;

% Convert the categorical label to a char array to simplify the application

% interface

label = cellstr(scores2label(scores,classNames,1));

out = label{1};
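The entry-point function calls a centerCrop helper whose source is not listed here. The following is a minimal sketch of what such a helper might do, not the helper shipped with the example. It assumes the video is a height-by-width-by-channel-by-frame array, crops the largest centered square from every frame, and resizes that square to the network input size by using imresize from Image Processing Toolbox. The name centerCropSketch is hypothetical.

```matlab
% Synthetic landscape clip: 90-by-160 pixels, 3 channels, 5 frames.
video = rand(90, 160, 3, 5, 'single');
cropped = centerCropSketch(video, [224 224 3]);
disp(size(cropped));

function out = centerCropSketch(video, inputSize)
% Crop the largest centered square from each frame, then resize it.
% video     : H-by-W-by-C-by-T array of frames
% inputSize : [rows cols channels] expected by the network
[h, w, ~, ~] = size(video);
side = min(h, w);

% First row and column of the centered square.
rowStart = floor((h - side)/2) + 1;
colStart = floor((w - side)/2) + 1;
square = video(rowStart:rowStart+side-1, colStart:colStart+side-1, :, :);

% imresize changes only the first two dimensions of an N-D array.
out = imresize(square, inputSize(1:2));
end
```

For the synthetic clip above, the result is a 224-by-224-by-3-by-5 array, which matches the 'SSCT' layout that the entry-point function passes to dlarray.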

Examine the Pretrained dlnetwork Object

Examine the pretrained dlnetwork object by using the analyzeNetwork (Deep Learning Toolbox) function. The dlnetwork object:

1. Uses a sequence input layer to accept image sequences as input.

2. Uses convolutional layers to apply the convolutional operations to each video frame independently, and extract features from each frame.

3. Uses a flatten layer to restore the sequence structure and reshape the output to vector sequences.

4. Uses the BiLSTM layer followed by output layers to classify the vector sequences.

Download the video classification network.

downloadVideoClassificationNetwork();
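After the download completes, the network can be opened in the analyzer. The following sketch assumes that the download places dlnet.mat on the MATLAB path and that the file contains a dlnetwork object. The variable name inside the MAT-file is not stated in this example, so the sketch searches for it rather than assuming one.

```matlab
% Load every variable in the MAT-file into a structure.
contents = load('dlnet.mat');
names = fieldnames(contents);

% Find the first variable that is a dlnetwork object.
isNet = cellfun(@(n) isa(contents.(n), 'dlnetwork'), names);
assert(any(isNet), 'No dlnetwork variable found in dlnet.mat.');
net = contents.(names{find(isNet, 1)});

% Open the network analyzer to inspect layers and activation sizes.
analyzeNetwork(net);
```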

Loop over the individual frames of situp.mp4 to view the test video in MATLAB.

videoFileName = 'situp.mp4';
video = readVideo(videoFileName);
numFrames = size(video,4);
figure
for i = 1:numFrames
    frame = video(:,:,:,i);
    imshow(frame/255);
    drawnow
end

Run net_classify in MATLAB

Run net_classify and note the output label. If a host GPU is available, the function uses it automatically.

net_classify()

ans = 'situp'

Generate and Deploy CUDA Code on the Target Device

To generate a CUDA executable that can be deployed to an NVIDIA target, create a GPU code configuration object for generating an executable. Set the target deep learning library to cudnn.

clear cfg
cfg = coder.gpuConfig('exe');
cfg.DeepLearningConfig = coder.DeepLearningConfig('cudnn');

Use the coder.hardware function to create a configuration object for the Jetson platform and assign it to the Hardware property of the GPU code configuration object cfg.

cfg.Hardware = coder.hardware('NVIDIA Jetson');

Set the build directory on the target hardware. Change the path to a location on your target hardware where you want to save the generated code.

cfg.Hardware.BuildDir = '/home/ubuntu/VideoClassify';

The custom main file, main.cu, is a wrapper that calls the net_classify function in the generated code.

cfg.CustomInclude = '.';
cfg.CustomSource = fullfile('main.cu');

Run the codegen command. The command generates the code, copies it to the target board, and then builds the executable on the target board.

codegen -config cfg net_classify

Code generation successful.

Run the Generated Application on the Target Device

Copy the test video file situp.mp4 from the host computer to the target device by using the putFile command. Ensure that this video file is in the location hardcoded in the entry-point function net_classify. In this example, this location is the target hardware build directory.

putFile(hwobj,videoFileName,cfg.Hardware.BuildDir);
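Before launching the application, it can help to confirm that the video landed where the entry-point function expects it. The following sketch assumes that hwobj, cfg, and videoFileName are still in the workspace from the earlier steps, and it uses the system function of the jetson object to run a Linux command on the board.

```matlab
% Build the expected location of the video on the target. This should match
% the path hardcoded in net_classify.
remoteVideo = [cfg.Hardware.BuildDir '/' videoFileName];

% List the file on the target board and show the result in MATLAB.
listing = system(hwobj, ['ls -l ' remoteVideo]);
disp(listing);
```

If the file is missing, the ls command fails on the target, which should surface the problem here rather than when the application runs.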

Use runApplication to launch the application on the target hardware. The label displays in the output terminal on the target.

status = evalc("runApplication(hwobj,'net_classify')");

See Also

Functions

coder.checkGpuInstall | codegen | coder.DeepLearningConfig | coder.loadDeepLearningNetwork | jetson | runApplication | killApplication | VideoReader

Objects

Topics

- Classify Videos Using Deep Learning (Deep Learning Toolbox)

- Getting Started with the MATLAB Coder Support Package for NVIDIA Jetson and NVIDIA DRIVE Platforms

- Long Short-Term Memory Neural Networks (Deep Learning Toolbox)

- Build and Run an Executable on NVIDIA Hardware

- Stop or Restart an Executable Running on NVIDIA Hardware

- Run Linux Commands on NVIDIA Hardware