yolov3ObjectDetectorMonoCamera

Detect objects in monocular camera using YOLO v3 deep learning detector

Since R2023a

Description

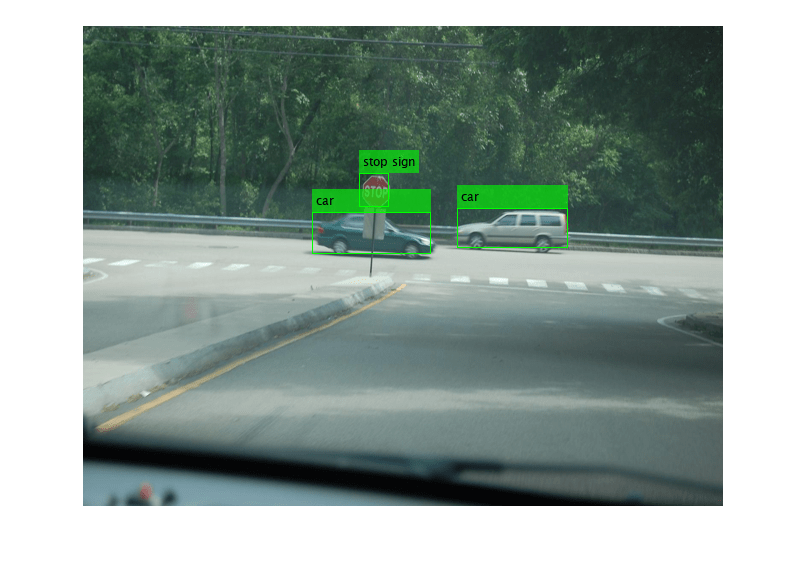

The yolov3ObjectDetectorMonoCamera object contains information about a you only look once version 3 (YOLO v3) object detector that is configured for use with a monocular camera sensor. To detect objects in an image captured by the camera, pass the detector to the detect object function.

When you use the detect object function with a yolov3ObjectDetectorMonoCamera object, use of a CUDA®-enabled NVIDIA® GPU is highly recommended. The GPU reduces computation time significantly. Usage of the GPU requires Parallel Computing Toolbox™. For information about the supported compute capabilities, see GPU Computing Requirements (Parallel Computing Toolbox).
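As a minimal sketch of the GPU recommendation above, the following snippet checks whether a supported GPU is available before choosing where to run detection. The canUseGPU function is part of MATLAB; the variable name env is illustrative, and the ExecutionEnvironment name-value argument shown in the comment is assumed to be accepted by the detect object function for this detector, so verify it against the detect reference page.

```matlab
% Choose an execution environment based on GPU availability.
% canUseGPU returns true only when a supported GPU and
% Parallel Computing Toolbox are both available.
if canUseGPU
    env = "gpu";
else
    env = "cpu";   % slower fallback when no supported GPU is present
end
fprintf("Selected execution environment: %s\n", env);

% Assumed usage (configuredDetector and I must already exist):
% [bboxes,scores,labels] = detect(configuredDetector,I,ExecutionEnvironment=env);
```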

Creation

1. Create a yolov3ObjectDetector object by using YOLO v3 deep learning networks trained on a COCO data set (requires Deep Learning Toolbox™ and Computer Vision Toolbox™ Model for YOLO v3 Object Detection).

       detector = yolov3ObjectDetector("darknet53-coco");

   Alternatively, you can create a yolov3ObjectDetector object by using a custom pretrained YOLO v3 network. For more information, see yolov3ObjectDetector.

2. Create a monoCamera object to model the monocular camera sensor.

       sensor = monoCamera(____);

3. Create a yolov3ObjectDetectorMonoCamera object by passing the detector and sensor as inputs to the configureDetectorMonoCamera function. The configured detector inherits property values from the original detector.

       configuredDetector = configureDetectorMonoCamera(detector,sensor,____);
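The creation steps above can be sketched end to end as follows. This is an illustration under stated assumptions, not a reference configuration: every numeric value (focal length, principal point, image size, mounting height, pitch, and object width range) is hypothetical and must be replaced with the calibration of your own camera. The sketch also assumes that Automated Driving Toolbox is installed, because it provides monoCamera and configureDetectorMonoCamera.

```matlab
% Step 1: load the pretrained YOLO v3 detector trained on COCO.
% Requires the Computer Vision Toolbox Model for YOLO v3 Object Detection.
detector = yolov3ObjectDetector("darknet53-coco");

% Step 2: model the monocular camera sensor.
% All calibration values below are hypothetical placeholders.
focalLength    = [800 800];   % [fx fy] in pixels (assumed)
principalPoint = [320 240];   % [cx cy] in pixels (assumed)
imageSize      = [480 640];   % [rows columns] in pixels (assumed)
intrinsics = cameraIntrinsics(focalLength,principalPoint,imageSize);

mountingHeight = 1.5;         % camera height above the ground, meters (assumed)
pitchAngle     = 2;           % downward pitch, degrees (assumed)
sensor = monoCamera(intrinsics,mountingHeight,"Pitch",pitchAngle);

% Step 3: configure the detector for the sensor.
% objectWidth is the [min max] width of the objects of interest in
% world units (meters here); the range is an assumed value.
objectWidth = [1.5 2.5];
configuredDetector = configureDetectorMonoCamera(detector,sensor,objectWidth);

% Display the class of the configured detector.
disp(class(configuredDetector))
```

The width range lets the configured detector discard candidate boxes whose implied real-world size falls outside the expected limits, so choose it to match the objects you intend to detect.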

Properties

Object Functions

| Function | Description |
| --- | --- |
| detect | Detect objects using YOLO v3 object detector configured for monocular camera |
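A minimal sketch of calling the detect object function follows. The helper name annotateMonoCameraDetections is hypothetical and introduced only for illustration. It assumes that configuredDetector is a yolov3ObjectDetectorMonoCamera object created with configureDetectorMonoCamera and that I is an RGB image captured by the modeled camera.

```matlab
function annotated = annotateMonoCameraDetections(configuredDetector,I)
% annotateMonoCameraDetections  Hypothetical helper that runs the
% configured detector on one image and overlays the detections.

    % Run detection. bboxes is M-by-4 in [x y width height] format,
    % scores holds the detection confidences, and labels holds the
    % class names of the detected objects.
    [bboxes,scores,labels] = detect(configuredDetector,I);

    if isempty(bboxes)
        % Nothing detected: return the image unchanged.
        annotated = I;
        return
    end

    % Overlay each bounding box with its confidence score.
    annotated = insertObjectAnnotation(I,"rectangle",bboxes,scores);

    % Report how many objects were found and which classes appeared.
    fprintf("Detected %d object(s).\n",size(bboxes,1));
    disp(unique(labels));
end
```

To view the result, pass the returned image to imshow, for example imshow(annotateMonoCameraDetections(configuredDetector,I)).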

Examples

Version History

Introduced in R2023a