Wireless Resource Allocation Using Graph Neural Network

This example demonstrates the use of graph neural network (GNN) for power allocation in wireless networks. With the rise of wireless communication devices, efficient and dynamic resource allocation has become essential. Power allocation, in particular, is vital for optimizing network performance and ensuring reliable communication. GNN presents a promising solution for addressing complex optimization problems due to its ability to process graph-structured data and model interactions between network nodes.

The example covers the simulation of wireless networks, the mathematical modeling of received signals, and the constrained optimization problem related to power allocation. Additionally, the example discusses the advantageous properties of a GNN, such as stability and transferability, along with the methods for training and testing the neural network. The GNN architecture employed here is versatile and can be applied to various other domains, such as recommendation systems.

Wireless Network

Wireless networks consist of interconnected nodes that communicate without physical connections, enabling mobile and flexible communication. These networks are characterized by dynamic topologies, varying user densities, and fluctuating channel conditions, necessitating accurate simulations to evaluate network performance and resource allocation strategies effectively. Here are the fundamental components:

Network geometry: Describes the spatial configuration of the network, including the user density and the layout of transmitters (base stations or access points) and receivers (users or devices). The user density refers to the number of users or devices per unit area within the network. The geographical positions of transmitters (Tx) and receivers (Rx) within the network directly affects the path loss, signal strength, and quality of the communication link. The relative positioning also determines the likelihood and severity of interference from other transmitters.

Wireless Channel: The medium through which the wireless signal propagates from the transmitter to the receiver. The wireless channel is characterized by path loss (large scale fading) and small-scale fading. Channel characteristics play a critical role in the design of communication protocols and systems. Channel conditions dictate the achievable data rates, reliability, and overall network performance.

Wireless Channel and Received Signal

The channel between Tx and Rx is typically modeled using a combination of path loss and Rayleigh fading channels. Path loss represents the reduction in signal power as it propagates through space. The path loss can be modeled as , where is the distance between Tx and Rx, is the path loss at a reference distance , and is the path loss exponent that varies depending on the propagation environment (e.g., urban, suburban, indoor).

Rayleigh fading is a statistical model to describe how a transmitted signal changes as it propagates through an environment. The channel gain in a Rayleigh fading channel is modeled as a complex Gaussian random variable with zero mean and variance . This means the channel power follows an exponential distribution with mean .

The combined effect of path loss and Rayleigh fading between Tx and Rx can be modeled as , where is the channel gain due to path loss for the distance between Tx and Rx and is the Rayleigh fading component. The received signal power is then given by: where is the transmitted power.

Considering a network with transmitting and receiving pairs, let denote the transmit power of the th transmitter, denote the direct-link channel between the th transmitter and receiver, denote the cross-link channel between transmitter and receiver , denote the data symbol that the th transmitter sends to the th receiver, denote the data symbol that the th transmitter sends to the th receiver, and denote the additive Gaussian noise with variance . The received signal at receiver is given by . The signal-to-interference-plus-noise ratio (SINR) for the th receiver is given by

where is the channel power gain from the th transmitter to the th receiver due to path loss and Rayleigh fading.

Wireless Network Simulation

A wireless network can be simulated based on user density, the sizes of the coverage area, and the modeling of the channel between the Tx and Rx. In simulating a wireless network with Rayleigh fading channels, the positions of the Tx and the Rx are randomly drawn within the specified area, with a maximum allowable distance between them to ensure connectivity. This randomness in positioning, combined with the stochastic nature of Rayleigh fading (where channel powers are exponentially distributed), provides a realistic scenario for evaluating network performance under varying conditions.

The hwirelessNetworkGenerator object in this example is designed to simulate the wireless networks that we want to optimize. It focuses on the spatial distribution of transmitter-receiver (Tx-Rx) pairs and the wireless channel model. The table below encapsulates the simulation parameters used by this object.

Parameter | Description | Units |

|---|---|---|

| User density, specifying the average number of users per unit area. | users/m² |

| Length of the simulation area for the wireless network. | meters (m) |

| Width of the simulation area for the wireless network. | meters (m) |

| Maximum allowable distance between a transmitter (Tx) and its corresponding receiver (Rx). | meters (m) |

| Reference distance used in the path loss model, typically a short distance where path loss is known or can be accurately measured. | meters (m) |

| Path loss exponent, indicating how rapidly the signal power decays with distance. | unitless |

| Mean value of the fading energy in Rayleigh fading, representing the average rate of fading. | unitless |

| Noise variance, representing the power of the noise in the channel. | watts (W) |

When the hwirelessNetworkGenerator object is created, it initially sets up the wireless network based on the parameters provided at instantiation, such as user density (rho), network dimensions (Ln and Wn), maximum Tx-Rx distance (rmax), etc. This setup involves generating random positions for Tx-Rx pairs and computing the path loss (large scale fading) for each pair. The positions of Tx-Rx and path loss are stored in the properties TxRxPositions and LargeScaleFading, respectively.

rho = 0.05; Ln = 80; Wn = 40; rmax = 20; dr = 1; gamma = 2.2; lambda = 2; N0 = 1e-6; wng = hwirelessNetworkGenerator(rho,Ln,Wn,rmax,dr,gamma,lambda,N0);

When the generate function is called on the object, it triggers the simulation of small-scale fading effects, specifically Rayleigh fading, for each communication link in the network. The fading effect is applied to the channel on top of the path loss computed during the initial setup of the wireless network.

[S,H] = generate(wng);

The channel power matrix represents the channel gains between transmitters and receivers within the network. The normalized channel power matrix , which results from dividing by its eigenvalue with greatest real part, is crucial for analyzing network performance using graph neural network. The histogram shows the exponential distribution of the small-scale fading power.

wng.plotNetwork(H)

![Figure contains 3 axes objects. Axes object 1 with title Tx - Rx Positions, xlabel Area Length, ylabel Area Width contains 2 objects of type scatter. These objects represent Tx, Rx. Axes object 2 with xlabel Distance [m], ylabel Chan. Pow. contains 2 objects of type line. One or more of the lines displays its values using only markers These objects represent Path loss, Path loss + Rayleigh. Axes object 3 with title lambda blank = blank 2, xlabel Small-Scale Fading Power, ylabel PDF contains an object of type histogram.](../../examples/deeplearning_shared/win64/WirelessResourceAllocationUsingGraphNeuralNetworkExample_01.png)

Wireless Network as a Graph

A wireless network can be modeled as a directed graph where nodes represent communication entities like users, access points, and antennas, and edges represent communication or interference links between them. The adjacency matrix of the graph indicates which nodes are connected. This graph structure helps capture spatial relationships and dependencies between nodes, which is crucial for tasks like effective power allocation in networks with multiple users. This figure depicts a device-to-device network and its transceiver pairs.

Power Allocation

Power allocation in wireless networks is an optimization problem [1]. The objective is to maximize the sum rate, which represents the total data transmission rate across all users, subject to a power constraint that ensures the total transmit power does not exceed a specified limit. This optimization problem balances the need for high data rates with the practical limitations of power resources. The power control problem for sum rate maximization is formulated as:

where is the channel capacity given by

In this expression, represents the mapping of the interference network , whose th element is for , and the resource allocation into the instantaneous performance metric of channel .

Graph Neural Network (GNN)

GNNs have been recognized as a robust framework for capturing the dependencies within wireless networks [1], [2]. By learning from both the overarching structure of the network and the specific connection patterns between nodes, GNNs determine optimal power distribution strategies. Within this framework, the optimization graph signal, designated as , encapsulates the power outputs of the transmitters. The graph shift operator, , defined by the relation , is instrumental in defining the network's connectivity and the interactions between nodes, showcasing the influence of one node's power level on its neighboring nodes. Specifically, GNNs leverage channel graph representations, , to derive power allocations, , through a graph filter approach. Key characteristics of GNNs include:

Stability: GNNs are designed to be stable to small perturbations in the input graph, meaning that minor changes in the network's topology or user distribution result in negligible variations in the power allocation outcome [3].

Transference: GNNs trained on specific wireless network configurations can generalize and perform well on different configurations, demonstrating GNNs ability to adapt to various wireless network sizes, densities, and topology changes [3].

Create Wireless Network and Graph Neural Network

Simulate a wireless network with parameters in this table.

Parameter |

|

|

|

|

|

|

|

|

Value |

Based on the network geometry, the network contains transceiver pairs in the network, where represents the area of the wireless network. For this network, generate 200 realizations of the fading channel in single precision. If you set the logical sendToRemote to true, and your system has a GPU, the hwirelessNetworkGenerator object performs all the operations on the GPU machine.

sendToRemote =false; batchSize = 200; dataType = "single"; trainWNG = hwirelessNetworkGenerator( ... rho,Ln,Wn,rmax,dr,gamma,lambda,N0, ... BatchSize=batchSize, ... DataType=dataType, ... SendToRemote=sendToRemote); N = trainWNG.NumTxRx;

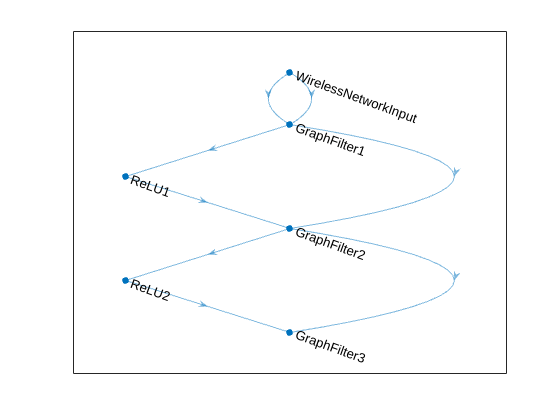

The hcreateWirelessGNN helper function in this example creates a Graph Neural Network (GNN) with a specific architecture designed for wireless network applications. The network architecture consists of an input layer and three graph perceptron layers, each with specific configurations.

Architecture Details

Input Layer: This layer accepts the initial power allocation and the graph shift operator . and represent the initial data about the wireless network nodes and their connections, respectively.

Three Graph Perceptrons: Each graph perceptron consists of a graph filter followed by a nonlinear ReLU (Rectified Linear Unit) activation function.

Graph Filter: A graph filter processes input features based on the graph structure and filter coefficients. Each graph filter has 5 filter taps, which means it applies a filter of length 5 to the input features. The th filter tap corresponds to the coefficient associated with the th power of the adjacency matrix, which aggregates information from nodes that are hops away. This hierarchical aggregation allows the GNN to effectively integrate multi-hop neighborhood information, enhancing its ability to model complex dependencies within the wireless network.

ReLU Activation: The ReLU layer applies a nonlinear transformation to the output of the graph filter, introducing non-linearity to the network.

The figure below shows the layout of the graph neural network.

Mathematical Representation of Graph Filter Output

The output of the graph filter at the th layer, , is given by

where:

is the set of filter tap coefficients for the th layer.

is the number of input features for the th layer.

is the number of output features for the th layer.

is the number of filter taps (in this case, 5) for the th layer.

is the graph shift operator (often the adjacency matrix or a normalized version of it).

is the th input feature at the th layer which is the th output feature at the th layer.

is the th output feature at the graph filter of the th layer.

The output of the ReLU nonlinear activation is . Group all filter coefficients in the filter tensor and all output features at the th layer in . Define the GNN operator as . By stacking these graph perceptrons, the GNN can learn complex patterns and relationships in the graph-structured wireless network data, enabling efficient resource allocation.

Graph Filter Configuration

The three graph perceptrons in the network are configured with different numbers of input and output features:

Layer | Number of Filter Taps (K) | Number of Input Features (G) | Number of Output Features (F) |

|---|---|---|---|

Layer 1 | 5 | 1 | 8 |

Layer 2 | 5 | 8 | 4 |

Layer 3 | 5 | 4 | 1 |

The hgraphFilterLayer object in this example implements graph filter operation and is used in the hcreateWirelessGNN helper function. To construct a graph filter layer, the object accepts the number of filter taps, number of input features, and number of output features. The predict function in hgraphFilterLayer performs the main filtering operation.

K = [5 5 5]; G = [1 8 4]; F = [8 4 1];

If you want to perform the training process instead of loading a pretrained network, set the trainNetwork to true. Initialize the graph neural network by passing the inputs, initial power allocation, and graph shift operator.

trainNetwork =false; if trainNetwork S0 = generate(trainWNG); S0 = dlarray(S0,"SSB"); p0 = dlarray( ... ones(1,trainWNG.NumTxRx,trainWNG.BatchSize,trainWNG.DataType), ... "SSB"); if trainWNG.SendToRemote p0 = gpuArray(p0); end net = hcreateWirelessGNN(K,G,F,N,batchSize,dataType,sendToRemote); net = initialize(net,p0,S0); if trainWNG.DataType == "double" net = dlupdate(@double,net); end else % Load the pre-trained network load("Model.mat","net") end

Illustrate the architecture of GNN. The diagram depicts the connections between the layers and their inputs.

figure plot(net)

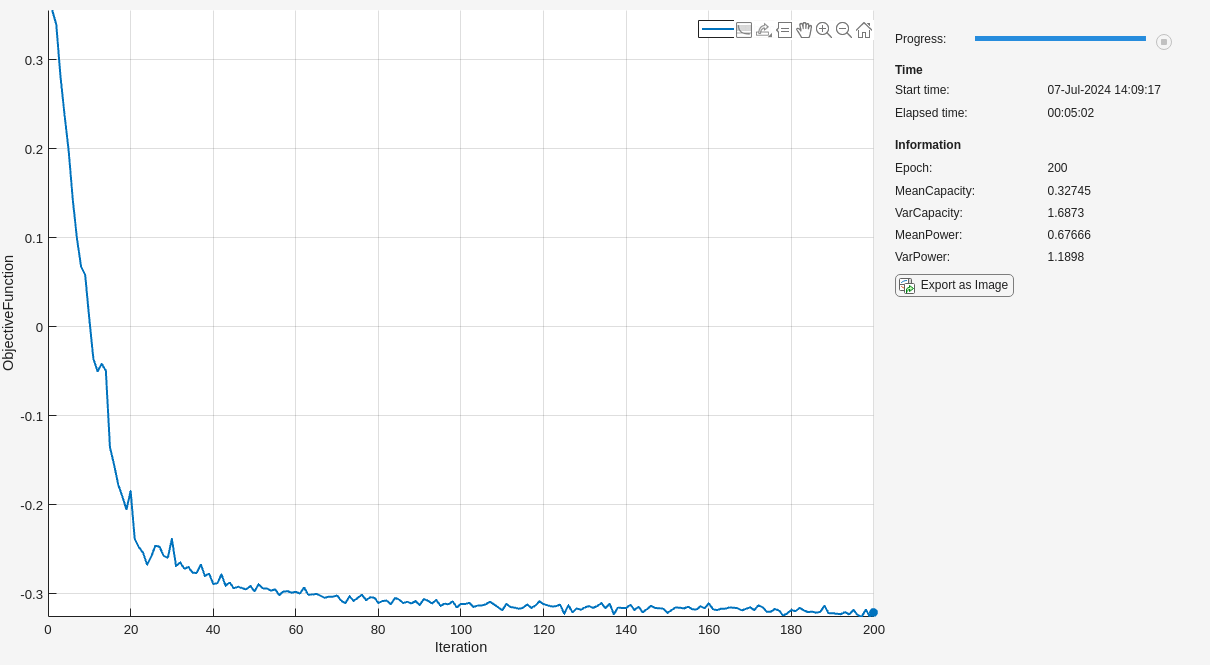

Learning Resource Allocation Policy

To determine the optimal power allocation using as inputs the class of functions that can be represented by the GNNs, sole this optimization problem for the graph filter coefficients:

The filter coefficients in are learned relative to the statistics of the interference graph . To learn these coefficients, an equivalent nonconstrained version of the optimization problem is formulated as

where is the importance weight associated with the constraints in the original optimization problem. This optimization problem is implemented in the objectiveFunction. The filter coefficients are learned using 40,000 observations which are fed to the network in 200 iterations in batches of size 200. To learn the filter coefficients, use the Adam update with step size 0.02.

mu_model = 0.01; if trainNetwork % Custom train loop monitor = trainingProgressMonitor( ... Metrics="ObjectiveFunction", ... Info=["Epoch" "MeanCapacity" ... "VarCapacity" "MeanPower" ... "VarPower"], ... XLabel="Iteration"); %#ok<*UNRCH> trainNumEpochs = 200; % Adam stepSize = 0.02; avgGrad = []; avgSqGrad = []; % Loop over epochs epoch = 0; while epoch < trainNumEpochs && ~monitor.Stop epoch = epoch + 1; % Forward data through network to get the corresponding allocation % strategy, compute the capacity as the performance under this % allocation strategy, and calculate the objective function and % gradients using the hobjectiveFunction and dlfeval, respectively [objFunc,gradients,info] = dlfeval(@hobjectiveFunction, ... net,trainWNG,mu_model); % Update the network parameters using the Adam update [net,avgGrad,avgSqGrad] = adamupdate(net,gradients, ... avgGrad,avgSqGrad,epoch,stepSize); % Update the training progress monitor recordMetrics(monitor,epoch,ObjectiveFunction=objFunc); updateInfo(monitor, ... Epoch=epoch, ... MeanPower=info.meanPower, ... VarPower=info.varPower, ... MeanCapacity=info.meanCapacity, ... VarCapacity=info.varCapacity); monitor.Progress = 100*epoch/trainNumEpochs; end end

Test

Test the trained network on 20,000 new fading channel observations leaving the locations of Tx and Rx unchanged.

testNumEpochs = 100; testInfo = htest(net,trainWNG,mu_model,testNumEpochs);

Testing Results (N = 160,Generate Random Tx-Rx Locations = 0): Loss mean: -0.3218, variance 0.0000 | Power mean: 0.6770, variance 0.0001 | Capacity mean: 0.3285, variance 0.0000

Stability

Stability in the context of GNNs refers to the robustness of the model against small perturbations or changes in the input graph structure or node features. In wireless networks, this property is crucial because the network topology and channel conditions can vary due to user mobility, environmental changes, and other dynamic factors. Stability ensures that small changes in user positions or channel conditions do not lead to significant deviations in the GNN output, maintaining reliable performance. Stability provides consistent resource allocation decisions, which is vital for maintaining quality of service in wireless communications.

To evaluate the trained network's stability, apply minor modifications to the input graph, including small adjustments to the positions of nodes, changes in channel gains, or variations in interference levels. Assess the impact of these perturbations on the outputs of the GNN, such as the power allocation choices and the sum rate, verifying that the changes stay within permissible boundaries. Examine the trained network against 100 new instances of the network, generating a new set of Tx/Rx locations for each instance and producing 200 instances of small-scale fading. To generate a new instance of the network each time, set the argument Randomize to true.

testStabilityWNG = hwirelessNetworkGenerator(rho,Ln,Wn,rmax,dr,gamma,lambda,N0, ... BatchSize=batchSize, ... DataType=dataType, ... Randomize=true); testStabilityInfo = htest(net,testStabilityWNG,mu_model,testNumEpochs);

Testing Results (N = 160,Generate Random Tx-Rx Locations = 1): Loss mean: -0.3033, variance 0.0093 | Power mean: 0.9848, variance 6.7151 | Capacity mean: 0.3131, variance 0.0079

Transference

Transference refers to the ability of a GNN to generalize learned patterns from the training set to new, unseen graphs. This property is essential for applying a trained GNN model to different wireless network scenarios without requiring retraining. The transference property allows the GNN to be applied to various network configurations, making it versatile and efficient for real-world applications. Transference enhances the scalability of the model, as it can handle different network sizes and densities effectively.

Evaluate the model transference capability through the construction of a new wireless network configuration. To verify how proficient the model is in adapting to diverse network setups, initiate a distinct network structure. Retain the original user density, reference distance, path loss exponent, and the mean power of small-scale fading established during the training phase. Modify the spatial dimensions of the network to encompass a length of 120 meters and a width of 60 meters. Set the maximum permissible distance between any transmitter-receiver pair to 30 meters. This network geometry yields a total of transceiver pairs.

testTransferenceWNG = hwirelessNetworkGenerator( ... rho,120,60,30,dr,gamma,lambda,N0, ... BatchSize=batchSize, ... DataType=dataType, ... Randomize=true); testTransferenceInfo = htest(net,testTransferenceWNG, ... mu_model,testNumEpochs);

Testing Results (N = 360,Generate Random Tx-Rx Locations = 1): Loss mean: -0.1841, variance 0.0019 | Power mean: 0.6231, variance 0.0001 | Capacity mean: 0.1903, variance 0.0019

Conclusion

This example highlights the ability of GNNs to address optimization problems in the realm of power allocation within wireless networks. GNNs excel in this area due to their ability to interpret and utilize the intricate data structures of wireless networks, optimizing network performance through strategic power distribution. Two critical features, stability and transference, stand out as key enablers of GNN effectiveness. Stability ensures GNNs can handle minor network variations without loss in performance, while transference allows these models to apply learned strategies across different network configurations efficiently. This adaptability and robustness position GNNs as a valuable tool for enhancing wireless network design and management, promising significant improvements in throughput, interference reduction, and resource allocation.

References

[1] Eisen, Mark, and Alejandro Ribeiro. “Optimal Wireless Resource Allocation With Random Edge Graph Neural Networks.” IEEE Transactions on Signal Processing 68 (2020): 2977–91. https://doi.org/10.1109/TSP.2020.2988255.

[2] Shen, Yifei, Yuanming Shi, Jun Zhang, and Khaled B. Letaief. “Graph Neural Networks for Scalable Radio Resource Management: Architecture Design and Theoretical Analysis.” IEEE Journal on Selected Areas in Communications 39, no. 1 (January 2021): 101–15. https://doi.org/10.1109/JSAC.2020.3036965.

[3] Ruiz, Luana, Fernando Gama, and Alejandro Ribeiro. “Graph Neural Networks: Architectures, Stability, and Transferability.” Proceedings of the IEEE 109, no. 5 (May 2021): 660–82. https://doi.org/10.1109/JPROC.2021.3055400.

Appendix

The functions listed in this section are only for use in this example. They may change or be removed in a future release.

hcreateWirelessGNN

The hcreateWirelessGNN function builds a Graph Neural Network (GNN) for wireless communications, incorporating parameters like filter taps K, input G and output features per layer F, graph nodes N, and batch size B. Tailored for power signal processing on graphs, this architecture offers a versatile approach to solving power allocation challenges in wireless networks.

function net = hcreateWirelessGNN(K,G,F,N,B,dataType,sendToRemote) %#ok<*DEFNU> % Graph filter and ReLU layers GFL = cell(length(K),1); RLU = cell(length(K)-1,1); for i = 1:length(K) gfl = hgraphFilterLayer(K(i),G(i),F(i), ... DataType=dataType, ... SendToRemote=sendToRemote, ... Name="GraphFilter"+i); GFL{i} = gfl; if i < length(K) RLU{i} = reluLayer(Name="ReLU"+i); end end % Create DL network wnil = hwirelessNetworkInputLayer([1 N B], ... Name="WirelessNetworkInput"); net = dlnetwork(wnil,Initialize=false); % Add and connect graph filter layers and ReLU layers for i = 1:length(K) if i == 1 % First layer net = addLayers(net,GFL{i}); net = connectLayers(net, ... "WirelessNetworkInput/X", ... sprintf("GraphFilter%d/X",i)); net = connectLayers(net, ... "WirelessNetworkInput/S", ... sprintf("GraphFilter%d/S",i)); net = addLayers(net,RLU{i}); net = connectLayers(net, ... sprintf("GraphFilter%d/X",i), ... sprintf("ReLU%d",i)); elseif i == length(K) % Last layer net = addLayers(net,GFL{i}); net = connectLayers(net, ... sprintf("ReLU%d",i-1), ... sprintf("GraphFilter%d/X",i)); net = connectLayers(net, ... sprintf("GraphFilter%d/S",i-1), ... sprintf("GraphFilter%d/S",i)); else net = addLayers(net,GFL{i}); net = connectLayers(net, ... sprintf("ReLU%d",i-1), ... sprintf("GraphFilter%d/X",i)); net = connectLayers(net, ... sprintf("GraphFilter%d/S",i-1), ... sprintf("GraphFilter%d/S",i)); net = addLayers(net,RLU{i}); net = connectLayers(net, ... sprintf("GraphFilter%d/X",i), ... sprintf("ReLU%d",i)); end end end

hobjectiveFunction

The hobjectiveFunction is designed to evaluate the performance of a power allocation strategy generated by a neural network within the context of wireless communication. This function operates by first forwarding input data through the network to obtain the proposed allocation strategy. It then computes the network capacity, which serves as a measure of performance under the given allocation strategy. Finally, the function calculates the value of the objective function, which likely reflects the efficiency or effectiveness of the power allocation in maximizing network capacity, along with the gradients necessary for optimizing the network parameters. This process enables the iterative improvement of the allocation strategy towards optimal network performance.

function [objFunc,gradients,info] = hobjectiveFunction(net,wng,mu_model) % Forward data through network to get the corresponding allocation % strategy, compute the capacity as the performance under this allocation % strategy, and calculate the negative of objective function and gradients % For each iteration, generate a new batch of random channel matrix [S,H] = generate(wng); % Cast to a formatted dlarray S = dlarray(S,"SSB"); % Forward data through network to get the corresponding allocation % strategy and compute the capacity as the performance under this % allocation strategy p0 = dlarray(ones(1,wng.NumTxRx,wng.BatchSize,wng.DataType),"SSB"); if wng.SendToRemote p0 = gpuArray(p0); end p = forward(net,p0,S); p = abs(p); c = wng.computeChannelCapacity(H,p); % Calculate negative of objective function objFunc = -mean(c,"all")+mu_model*mean(p,"all"); info.meanCapacity = mean(extractdata(c),"all"); info.varCapacity = var(extractdata(c),[],"all"); info.meanPower = mean(extractdata(p),"all"); info.varPower = var(extractdata(p),[],"all"); % Calculate gradients of loss with respect to learnable parameters gradients = dlgradient(objFunc,net.Learnables); end

htest

The htest function is designed to evaluate the performance of a trained GNN on a set of new observations from a wireless network. This function operates by inputting unseen data, representing different states or configurations of the wireless network, into the trained GNN model. The primary goal is to assess how well the model generalizes its learned power allocation strategies to new, possibly unseen scenarios beyond the training dataset. Upon execution, the htest function forward the new observations through the trained GNN to predict the optimal power allocation for each scenario.

function info = htest(net,wng,mu,testNumEpochs) %HTEST Evaluates a trained GNN on new wireless network observations info.meanLoss = zeros(testNumEpochs,1,wng.DataType); info.powers = zeros(testNumEpochs,wng.NumTxRx,wng.BatchSize,wng.DataType); info.meanPower = zeros(testNumEpochs,1,wng.DataType); info.capacities = zeros(testNumEpochs,wng.NumTxRx,wng.BatchSize,wng.DataType); info.meanCapacity = zeros(testNumEpochs,1,wng.DataType); for epoch = 1:testNumEpochs % For each iteration, generate a new random channel matrix [S,H] = generate(wng); S = dlarray(S,"SSB"); % Get the corresponding allocation strategy p0 = dlarray(ones(1,wng.NumTxRx,wng.BatchSize,wng.DataType),"SSB"); if wng.SendToRemote p0 = gpuArray(p0); end p = predict(net,p0,S); p = abs(p); % Calculate the capacity as the performance under this allocation % strategy c = wng.computeChannelCapacity(H,p); % Cache powers, capacities, and losses ep = extractdata(p); info.powers(epoch,:,:) = ep; info.meanPower(epoch) = mean(ep,"all"); ec = extractdata(c); info.capacities(epoch,:,:) = ec; info.meanCapacity(epoch) = mean(ec,"all"); info.meanLoss(epoch) = -info.meanCapacity(epoch)+mu*info.meanPower(epoch); end fprintf("Testing Results (N = %d,Generate Random Tx-Rx Locations = %d):\n",wng.NumTxRx,wng.Randomize) fprintf("\tLoss mean: %.4f, variance %.4f"+ ... " | Power mean: %.4f, variance %.4f"+ ... " | Capacity mean: %.4f, variance %.4f\n", ... mean(info.meanLoss),var(info.meanLoss), ... mean(info.meanPower),var(info.meanPower), ... mean(info.meanCapacity),var(info.meanCapacity)); end

Copyright 2024, The MathWorks, Inc.

See Also

Functions

Topics

- Graph Signal Processing and Brain Signal Analysis (Signal Processing Toolbox)