targetPoses

Target positions and orientations relative to host vehicle

Description

Examples

In a simple driving scenario, obtain the poses of target vehicles in the coordinate system of the ego vehicle. Then convert these poses back to the world coordinates of the driving scenario.

Create a driving scenario.

scenario = drivingScenario;

Create target actors.

actor(scenario,'ClassID',1, ... 'Position',[10 20 30], ... 'Velocity',[12 113 14], ... 'Yaw',54, ... 'Pitch',25, ... 'Roll',22, ... 'AngularVelocity',[24 42 27]); actor(scenario,'ClassID',1, ... 'Position',[17 22 12], ... 'Velocity',[19 13 15], ... 'Yaw',45, ... 'Pitch',52, ... 'Roll',2, ... 'AngularVelocity',[42 24 29]);

Add an ego vehicle actor.

egoActor = actor(scenario,'ClassID',1, ... 'Position',[1 2 3], ... 'Velocity',[1.2 1.3 1.4], ... 'Yaw',4, ... 'Pitch',5, ... 'Roll',2, ... 'AngularVelocity',[4 2 7]);

Use the actorPoses function to return the poses of all actors in the scenario. Pose properties (position, velocity, and orientation) are in the world coordinates of the driving scenario. Save the target actors to a separate variable and inspect the pose of the first target actor.

allPoses = actorPoses(scenario); targetPosesScenarioCoords = allPoses(1:2); targetPosesScenarioCoords(1)

ans = struct with fields:

ActorID: 1

Position: [10 20 30]

Velocity: [12 113 14]

Roll: 22

Pitch: 25

Yaw: 54

AngularVelocity: [24 42 27]

Use the driving.scenario.targetsToEgo function to convert the target poses to the ego-centric coordinates of the ego actor. Inspect the pose of the first actor.

targetPosesEgoCoords = driving.scenario.targetsToEgo(targetPosesScenarioCoords,egoActor); targetPosesEgoCoords(1)

ans = struct with fields:

ActorID: 1

Position: [7.8415 18.2876 27.1675]

Velocity: [18.6826 112.0403 9.2960]

Roll: 16.4327

Pitch: 23.2186

Yaw: 47.8114

AngularVelocity: [-3.3744 47.3021 18.2569]

Alternatively, use the targetPoses function to obtain all target actor poses in ego vehicle coordinates. Display the first target pose, which matches the previously calculated pose.

targetPosesEgoCoords = targetPoses(egoActor); targetPosesEgoCoords(1)

ans = struct with fields:

ActorID: 1

ClassID: 1

Position: [7.8415 18.2876 27.1675]

Velocity: [18.6826 112.0403 9.2960]

Roll: 16.4327

Pitch: 23.2186

Yaw: 47.8114

AngularVelocity: [-3.3744 47.3021 18.2569]

Use the driving.scenario.targetsToScenario to convert the target poses back to the world coordinates of the scenario. Display the first target pose, which matches the original target pose.

targetPosesScenarioCoords = driving.scenario.targetsToScenario(targetPosesEgoCoords,egoActor); targetPosesScenarioCoords(1)

ans = struct with fields:

ActorID: 1

ClassID: 1

Position: [10.0000 20.0000 30.0000]

Velocity: [12.0000 113.0000 14.0000]

Roll: 22

Pitch: 25.0000

Yaw: 54

AngularVelocity: [24.0000 42.0000 27.0000]

Obtain the poses of targets that are within the maximum range of a sensor mounted to the ego vehicle.

Create a driving scenario. The scenario contains a 75-meter straight road, an ego vehicle, and two target vehicles.

The nearest target vehicle is 45 meters away and in the same lane as the ego vehicle.

The farthest target vehicle is 65 meters away and in the opposite lane of the ego vehicle.

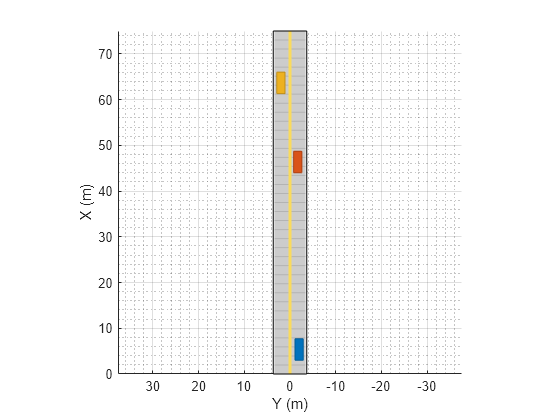

Plot the driving scenario.

scenario = drivingScenario; roadCenters = [0 0 0; 75 0 0]; laneSpecification = lanespec([1 1]); road(scenario,roadCenters,'Lanes',laneSpecification,'Name','Road'); egoVehicle = vehicle(scenario, ... 'ClassID',1, ... 'Position',[4 -2 0], ... 'Name','Ego'); vehicle(scenario, ... 'ClassID',1, ... 'Position',[45 -1.7 0], ... 'Name','Near Target'); vehicle(scenario, ... 'ClassID',1, ... 'Position',[65 2 0], ... 'Yaw',-180, ... 'Name','Far Target'); plot(scenario)

Create a vision sensor mounted to the front bumper of the ego vehicle. Configure the sensor to have a maximum detection range of 50 meters.

sensor = visionDetectionGenerator('SensorIndex',1, ... 'SensorLocation',[3.7 0], ... 'MaxRange',50);

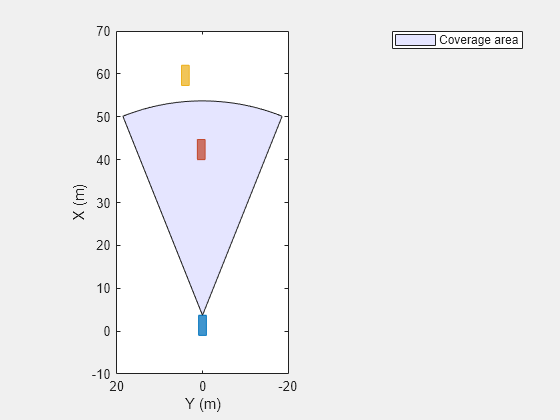

Plot the outlines of the vehicles and the coverage area of the sensor. The nearest target vehicle is within range of the sensor but the farthest target vehicle is not.

bep = birdsEyePlot; olPlotter = outlinePlotter(bep); [position,yaw,length,width,originOffset,color] = targetOutlines(egoVehicle); plotOutline(olPlotter,position,yaw,length,width, ... 'OriginOffset',originOffset,'Color',color) caPlotter = coverageAreaPlotter(bep,'DisplayName','Coverage area','FaceColor','blue'); mountPosition = sensor.SensorLocation; range = sensor.MaxRange; orientation = sensor.Yaw; fieldOfView = sensor.FieldOfView(1); plotCoverageArea(caPlotter,mountPosition,range,orientation,fieldOfView);

Obtain the poses of targets that are within the range of the sensor. The output structure contains the pose of only the nearest target, which is under 50 meters away from the ego vehicle.

poses = targetPoses(egoVehicle,range)

poses = struct with fields:

ActorID: 2

ClassID: 1

Position: [41 0.3000 0]

Velocity: [0 0 0]

Roll: 0

Pitch: 0

Yaw: 0

AngularVelocity: [0 0 0]

In this example, you will add sensors to a driving scenario using the addSensors function and get ground-truth target poses based on the individual sensor inputs. Then, you process the ground-truth target poses into detections and visualize them.

Set Up Driving Scenario and Bird's-Eye-Plot

Create a driving scenario with an ego vehicle and two target vehicles. One target vehicle is in the front and the other is to the left of the ego-vehicle.

[scenario, egovehicle] = helperCreateDrivingScenario;

Configure a vision sensor to be mounted at the front of the ego vehicle.

visionSensor = visionDetectionGenerator(SensorIndex=1,SensorLocation=[4.0 0],Height=1.1,Pitch=1.0,DetectorOutput="Objects only");Configure an ultrasonic sensor to be mounted at the left side of the ego-vehicle.

leftUltrasonic = ultrasonicDetectionGenerator(SensorIndex=2,MountingLocation=[0.5 1 0.2],MountingAngles=[90 0 0],FieldOfView=[70 35],UpdateRate=100);

Create a bird's-eye-plot to visualize the driving scenario.

[detPlotter,lmPlotter,olPlotter,lulrdPlotter,luldetPlotter,bepAxes] = helperCreateBEP(visionSensor,leftUltrasonic);

Add Sensors and Simulate Driving Scenario

Add both vision and ultrasonic sensors to the driving scenario using the addSensors function. You can add sensors to any vehicle in the driving scenario using the addSensors function by specifying the actor ID of the desired vehicle. In this example, specify the ego-vehicle actor ID.

addSensors(scenario,{visionSensor,leftUltrasonic},egovehicle.ActorID);Simulate the driving scenario. Note that you get separate target poses based on individual sensors by specifying their respective sensor IDs as inputs to the targetPoses function. This syntax returns the ground-truth poses of targets only within the range of the specified sensor. You then pass the ground-truth poses to their respective sensor models to generate detections and visualize them.

legend(bepAxes,'show') lookaheadDistance = 0:0.5:60; while advance(scenario) lb = laneBoundaries(egovehicle,'XDistance',lookaheadDistance,'LocationType','inner'); [lmv,lmf] = laneMarkingVertices(egovehicle); % Get ground-truth poses of targets in the range of vision and ultrasonic sensors separately tgtposeVision = targetPoses(scenario,visionSensor.SensorIndex); tgtposeUltrasonic = targetPoses(scenario,leftUltrasonic.SensorIndex); % Obtain detections based on targets only in range [obdets,obValid] = visionSensor(tgtposeVision,scenario.SimulationTime); [lobdets,lobValid] = leftUltrasonic(tgtposeUltrasonic,scenario.SimulationTime); helperPlotBEPVision(egovehicle,lmv,lmf,obdets,obValid,detPlotter,lmPlotter,olPlotter) helperPlotBEPUltrasonic(lobdets,lobValid,leftUltrasonic,lulrdPlotter,luldetPlotter) end

Helper Functions

helperCreateDrivingScenario creates a driving scenario by specifying the road and vehicle properties.

function [scenario, egovehicle] = helperCreateDrivingScenario scenario = drivingScenario; roadCenters = [-120 30 0;-60 0 0;0 0 0; 60 0 0; 120 30 0; 180 60 0]; lspc = lanespec(3); road(scenario,roadCenters,Lanes=lspc); % Create an ego vehicle that travels in the center lane at a velocity of 30 m/s. egovehicle = vehicle(scenario,ClassID=1); egopath = [1.5 0 0; 60 0 0; 111 25 0]; egospeed = 30; smoothTrajectory(egovehicle,egopath,egospeed); % Add a target vehicle that travels ahead of the ego vehicle at 30.5 m/s in the right lane, and changes lanes close to the ego vehicle. ftargetcar = vehicle(scenario,ClassID=1); ftargetpath = [8 2; 60 -3.2; 120 33]; ftargetspeed = 40; smoothTrajectory(ftargetcar,ftargetpath,ftargetspeed); % Add a second target vehicle that travels in the left lane at 32m/s. ltargetcar = vehicle(scenario,ClassID=1); ltargetpath = [-5.0 3.5 0; 60 3.5 0; 111 28.5 0]; ltargetspeed = 30; smoothTrajectory(ltargetcar,ltargetpath,ltargetspeed); end

helperCreateBEP creates a bird's-eye-plot for visualing the driving scenario simulation.

function [detPlotter, lmPlotter, olPlotter, lulrdPlotter,luldetPlotter,bepAxes] = helperCreateBEP(visionSensor,leftUltrasonic) figureName = "BEP"; Figure = findobj('Type','Figure',Name=figureName); if isempty(Figure) screenSize = double(get(groot,'ScreenSize')); Figure = figure(Name=figureName); Figure.Position = [screenSize(3)*0.17 screenSize(4)*0.15 screenSize(3)*0.4 screenSize(4)*0.6]; Figure.NumberTitle = 'off'; Figure.MenuBar = 'none'; Figure.ToolBar = 'none'; end clf(Figure); bepAxes = axes(Figure); grid(bepAxes,'on'); legend(bepAxes,'hide'); bep = birdsEyePlot(Parent=bepAxes,XLim=[-20 60],YLim=[-35 35]); caPlotterV = coverageAreaPlotter(bep,DisplayName="Vision Coverage area",FaceColor="b"); caPlotterU = coverageAreaPlotter(bep,DisplayName="Ultrasonic Coverage area",FaceColor="m"); detPlotter = detectionPlotter(bep,DisplayName="Object detections"); lmPlotter = laneMarkingPlotter(bep,DisplayName="Lane markings"); olPlotter = outlinePlotter(bep); plotCoverageArea(caPlotterV,visionSensor.SensorLocation,... visionSensor.MaxRange,visionSensor.Yaw, ... visionSensor.FieldOfView(1)); plotCoverageArea(caPlotterU,leftUltrasonic.MountingLocation(1:2),... leftUltrasonic.DetectionRange(3),leftUltrasonic.MountingAngles(1), ... leftUltrasonic.FieldOfView(1)); lulrdPlotter = rangeDetectionPlotter(bep,DisplayName="Left Ultrasonic Ranges",LineStyle="-"); luldetPlotter = detectionPlotter(bep,DisplayName="Point-On-Target (Left Ultrasonic)",MarkerFaceColor="k"); end

helperPlotBEPVision plots ego vehicle outlines, lanes and vision detections on the bird's-eye-plot.

function helperPlotBEPVision(egovehicle,lmv,lmf,obdets,obValid,detPlotter, lmPlotter, olPlotter) plotLaneMarking(lmPlotter,lmv,lmf) [objposition,objyaw,objlength,objwidth,objoriginOffset,color] = targetOutlines(egovehicle); plotOutline(olPlotter,objposition,objyaw,objlength,objwidth, ... OriginOffset=objoriginOffset,Color=color) if obValid detPos = cellfun(@(d)d.Measurement(1:2),obdets,UniformOutput=false); detPos = vertcat(zeros(0,2),cell2mat(detPos')'); plotDetection(detPlotter,detPos) end end

helperPlotBEPUltrasonic plots ultrasonic range measurements and points on targets.

function helperPlotBEPUltrasonic(lobdets,lobValid,leftUltrasonic,lulrdPlotter,luldetPlotter) if ~isempty(lobdets) && lobValid lranges = lobdets{1}.Measurement; plotRangeDetection(lulrdPlotter,lranges,leftUltrasonic.FieldOfView(1),leftUltrasonic.MountingLocation,leftUltrasonic.MountingAngles) plotDetection(luldetPlotter,lobdets{1}.ObjectAttributes{1}.PointOnTarget(1:2)') end end

Input Arguments

Obtain target poses based on ego-vehicle actor

Actor belonging to a drivingScenario object, specified as an

Actor or Vehicle object. To create these objects, use the

actor and vehicle functions, respectively.

Circular range around the ego vehicle actor, specified as a nonnegative

real scalar. The targetPoses function returns only the

poses of targets that lie within this range. Units are in meters.

Obtain target poses based on sensor range

Driving scenario, specified as a drivingScenario object.

Unique sensor identifier, specified as a positive integer. This property distinguishes sensors in a multisensor system.

Example: 5

Data Types: double

Output Arguments

Target poses, in ego vehicle coordinates, returned as a structure or as an

array of structures. The pose of the ego vehicle actor,

ac, is not included.

A target pose defines the position, velocity, and orientation of a target in ego vehicle coordinates. Target poses also include the rates of change in actor position and orientation.

Each pose structure has these fields.

| Field | Description |

|---|---|

ActorID | Scenario-defined actor identifier, specified as a positive integer. |

ClassID | Classification identifier, specified as a nonnegative integer. 0

represents an object of an unknown or unassigned class. |

Position | Position of actor, specified as a real-valued vector of the form [x y z]. Units are in meters. |

Velocity | Velocity (v) of actor in the x-, y-, and z-directions, specified as a real-valued vector of the form [vx vy vz]. Units are in meters per second. |

Roll | Roll angle of actor, specified as a real scalar. Units are in degrees. |

Pitch | Pitch angle of actor, specified as a real scalar. Units are in degrees. |

Yaw | Yaw angle of actor, specified as a real scalar. Units are in degrees. |

AngularVelocity | Angular velocity (ω) of actor in the x-, y-, and z-directions, specified as a real-valued vector of the form [ωx ωy ωz]. Units are in degrees per second. |

For full definitions of these structure fields, see the actor and vehicle functions.

More About

In a driving scenario, you can specify one actor as the observer of all other actors, similar to how the driver of a car observes all other cars. The observer actor is called the ego actor or, more specifically, the ego vehicle. From the perspective of the ego vehicle, all other actors (such as vehicles and pedestrians) are the observed actors, called targets. Ego vehicle coordinates are centered and oriented with reference to the ego vehicle. The coordinates of the driving scenario are world coordinates.

Version History

Introduced in R2017a

MATLAB Command

You clicked a link that corresponds to this MATLAB command:

Run the command by entering it in the MATLAB Command Window. Web browsers do not support MATLAB commands.

웹사이트 선택

번역된 콘텐츠를 보고 지역별 이벤트와 혜택을 살펴보려면 웹사이트를 선택하십시오. 현재 계신 지역에 따라 다음 웹사이트를 권장합니다:

또한 다음 목록에서 웹사이트를 선택하실 수도 있습니다.

사이트 성능 최적화 방법

최고의 사이트 성능을 위해 중국 사이트(중국어 또는 영어)를 선택하십시오. 현재 계신 지역에서는 다른 국가의 MathWorks 사이트 방문이 최적화되지 않았습니다.

미주

- América Latina (Español)

- Canada (English)

- United States (English)

유럽

- Belgium (English)

- Denmark (English)

- Deutschland (Deutsch)

- España (Español)

- Finland (English)

- France (Français)

- Ireland (English)

- Italia (Italiano)

- Luxembourg (English)

- Netherlands (English)

- Norway (English)

- Österreich (Deutsch)

- Portugal (English)

- Sweden (English)

- Switzerland

- United Kingdom (English)