average

Compute performance metrics for average receiver operating characteristic (ROC) curve in multiclass problem

Since R2022b

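Syntax

[FPR,TPR,Thresholds,AUC] = average(rocObj,type)

[avg1,avg2,Thresholds,AUC] = average(rocObj,type,metric1,metric2)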

Description

[

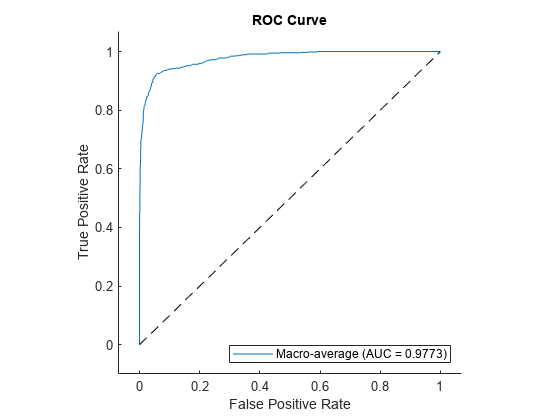

computes the averages of performance metrics stored in the FPR,TPR,Thresholds,AUC] = average(rocObj,type)rocmetrics object

rocObj for a multiclass classification problem using the averaging

method specified in type. The function returns the average false

positive rate (FPR) and the average true positive rate

(TPR) for each threshold value in Thresholds.

The function also returns AUC, the area under the ROC curve composed of

FPR and TPR.
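The following MATLAB sketch exercises the first syntax end to end. The data set (fisheriris), the classifier (fitctree with its default 10-fold cross-validation), and the plotting choices are illustrative assumptions, not part of this reference page. The loop calls average once per averaging type ("micro", "macro", "weighted") so the three average ROC curves can be compared on one set of axes.

```matlab
% Illustrative data and model (assumptions, not required by average).
load fisheriris
rng("default")                                % reproducible CV partition
mdl = fitctree(meas,species,Crossval="on");   % 10-fold cross-validated tree
[~,Scores] = kfoldPredict(mdl);               % one score column per class

% One rocmetrics object holds all one-versus-all binary problems.
rocObj = rocmetrics(species,Scores,mdl.ClassNames);

% Compute and plot the average ROC curve for each averaging method.
types = ["micro","macro","weighted"];
figure
hold on
for k = 1:numel(types)
    [FPR,TPR,Thresholds,AUC] = average(rocObj,types(k));
    plot(FPR,TPR,DisplayName=sprintf("%s (AUC = %.4f)",types(k),AUC))
end
hold off
xlabel("False Positive Rate")
ylabel("True Positive Rate")
legend(Location="southeast")
```

Because every call uses the same rocObj, any difference between the plotted curves comes only from the averaging method passed as type.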

[avg1,avg2,Thresholds,AUC] = average(rocObj,type,metric1,metric2) computes the performance metrics and returns avg1 (the average of metric1) and avg2 (the average of metric2), in addition to Thresholds, the corresponding threshold for each of the average values, and AUC, the area under the curve generated by metric1 and metric2. (since R2024a)

average supports the AUC output only when metric1 and metric2 are TPR and FPR, or are precision and recall:

- TPR and FPR: Specify TPR using "TruePositiveRate", "tpr", or "recall", and specify FPR using "FalsePositiveRate" or "fpr". With these choices, AUC is the area under a ROC curve.
- Precision and recall: Specify precision using "PositivePredictiveValue", "ppv", "prec", or "precision", and specify recall using "TruePositiveRate", "tpr", or "recall". With these choices, AUC is the area under a precision-recall curve.
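A hedged MATLAB sketch of the second syntax (R2024a or later) for a macro-averaged precision-recall curve. It assumes that average can use only metrics stored in rocObj, so it requests precision from rocmetrics through the AdditionalMetrics name-value argument. It also assumes recall belongs in metric1 (the x-axis), by analogy with the FPR-then-TPR output order of the first syntax. The data set and classifier are again illustrative choices.

```matlab
% Illustrative data and model (assumptions, not required by average).
load fisheriris
rng("default")
mdl = fitctree(meas,species,Crossval="on");
[~,Scores] = kfoldPredict(mdl);

% Precision is not a default metric, so store it in the object
% (assumption: average reads metrics from rocObj rather than recomputing them).
rocObj = rocmetrics(species,Scores,mdl.ClassNames, ...
    AdditionalMetrics="PositivePredictiveValue");

% Macro-averaged recall (metric1) and precision (metric2);
% AUC is then the area under the average precision-recall curve.
[avgRecall,avgPrecision,Thresholds,AUC] = ...
    average(rocObj,"macro","recall","precision");

plot(avgRecall,avgPrecision)
xlabel("Recall")
ylabel("Precision")
title(sprintf("Macro-average precision-recall curve (AUC = %.4f)",AUC))
```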


Alternative Functionality

You can use the plot function, with the AverageROCType name-value argument, to create the average ROC curve. The function returns a ROCCurve object containing the XData, YData, Thresholds, and AUC properties, which correspond to the output arguments FPR, TPR, Thresholds, and AUC of the average function, respectively. For an example, see Plot ROC Curve.