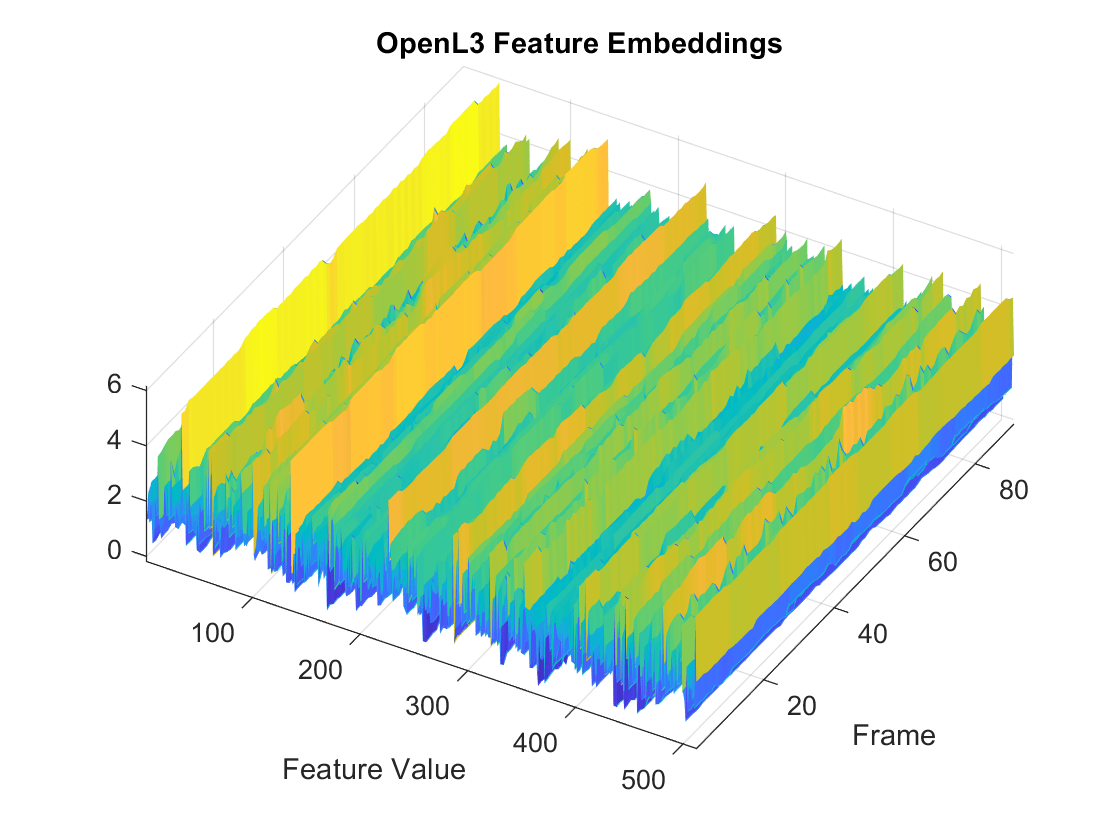

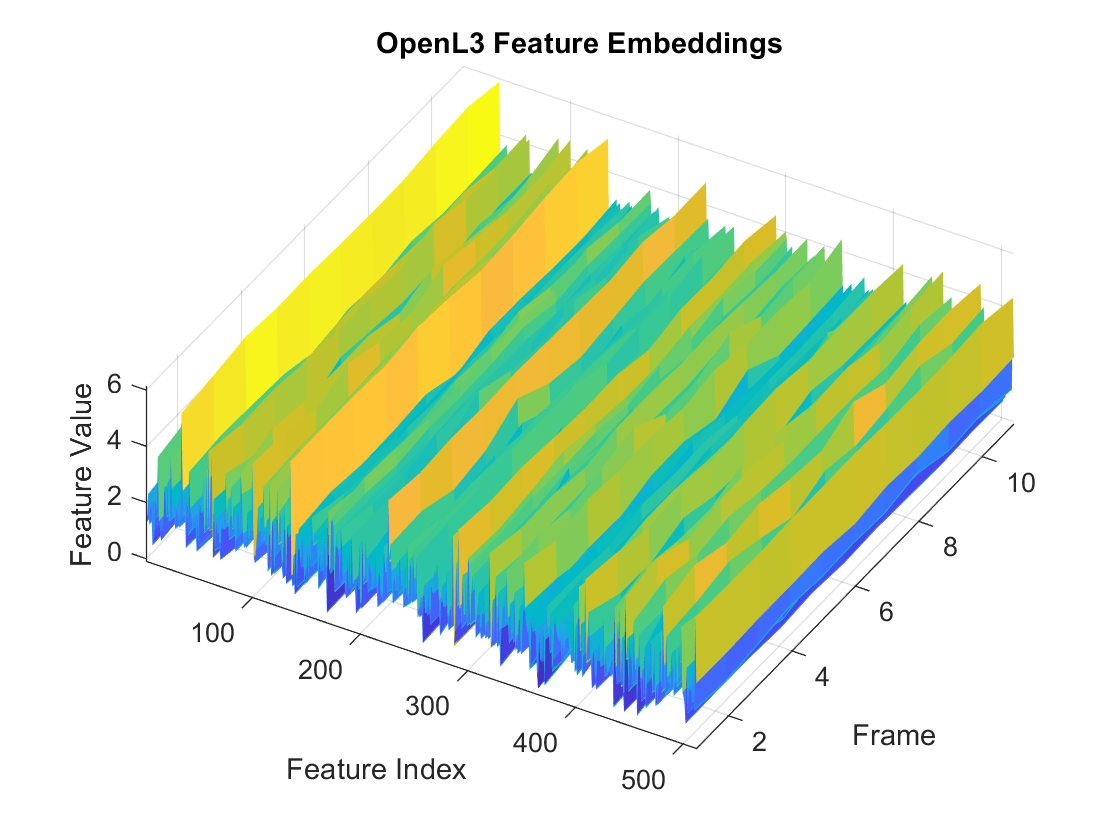

openl3Embeddings

Description

embeddings = openl3Embeddings(audioIn,fs) returns OpenL3 feature embeddings over time for the audio input audioIn with sample rate fs. Columns of the input are treated as individual channels.

embeddings = openl3Embeddings(audioIn,fs,Name=Value) specifies options using one or more name-value arguments. For example, embeddings = openl3Embeddings(audioIn,fs,OverlapPercentage=75) applies a 75% overlap between consecutive frames used to create the audio embeddings.

This function requires both Audio Toolbox™ and Deep Learning Toolbox™.

Examples
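
The two syntaxes in the description can be sketched as follows. This is a minimal illustration, not an official example: the 48 kHz sample rate and the synthetic noise signal are assumptions chosen only so the snippet is self-contained, and the variable name embeddingsOverlap is hypothetical. Running it requires Audio Toolbox™ and Deep Learning Toolbox™.

```matlab
% Synthetic two-second mono signal (assumed test input; any audio
% matrix with one column per channel could be used instead).
fs = 48000;                      % sample rate in Hz (assumed value)
audioIn = 0.1*randn(2*fs,1);     % one column = one channel

% Basic syntax: extract embeddings with the default options.
embeddings = openl3Embeddings(audioIn,fs);

% Name-value syntax: 75% overlap between consecutive frames.
embeddingsOverlap = openl3Embeddings(audioIn,fs,OverlapPercentage=75);

% Inspect the sizes of the two results to see how the overlap
% setting changes the number of embeddings produced.
size(embeddings)
size(embeddingsOverlap)
```

Comparing the two sizes shows the effect of OverlapPercentage on how many frames, and therefore how many embeddings, are computed for the same signal.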

Input Arguments

Name-Value Arguments

Output Arguments

References

[1] Cramer, Jason, et al. "Look, Listen, and Learn More: Design Choices for Deep Audio Embeddings." In ICASSP 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE, 2019, pp. 3852-56. DOI.org (Crossref), doi:10.1109/ICASSP.2019.8682475.

Extended Capabilities

Version History

Introduced in R2022a