trainWordEmbedding

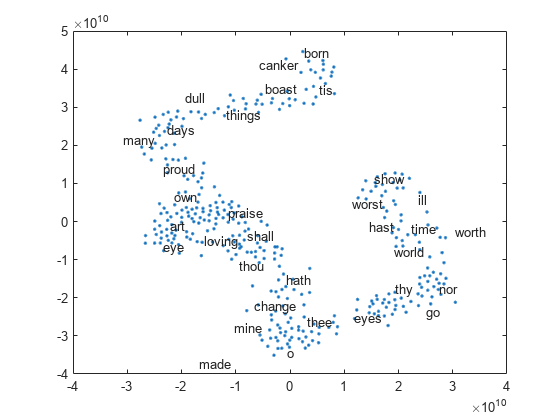

Train word embedding

Syntax

Description

emb = trainWordEmbedding(___,Name,Value) specifies additional options using one or more name-value arguments. For example, 'Dimension',50 specifies the word embedding dimension to be 50.
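As a rough illustration of the name-value syntax, the sketch below trains a 50-dimensional embedding on a tiny made-up corpus. The text data and variable names are hypothetical, and the 'MinCount' and 'Verbose' options are included only on the assumption that the default minimum word count would discard most words in a corpus this small. Real training data should be far larger.

```matlab
% Hypothetical toy corpus, for illustration only.
textData = [
    "the quick brown fox jumps over the lazy dog"
    "a fast brown fox leaps over a sleepy dog"
    "the dog sleeps while the fox runs"];
documents = tokenizedDocument(textData);

% Train with the embedding dimension set to 50.
% 'MinCount',1 keeps words that appear only once (assumed necessary here
% because the corpus is tiny); 'Verbose',0 suppresses progress output.
emb = trainWordEmbedding(documents, ...
    'Dimension',50, ...
    'MinCount',1, ...
    'Verbose',0);

% Look up the vector for one word; it has one column per dimension.
vec = word2vec(emb,"fox");
```

The Dimension property of the returned wordEmbedding object can be used to confirm that the option took effect.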

Examples

Input Arguments

Name-Value Arguments

Output Arguments

More About

Tips

The training algorithm uses the number of threads given by the function maxNumCompThreads. To learn how to change the number of threads used by MATLAB®, see maxNumCompThreads.
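A minimal sketch of limiting the thread count around a training call might look like the following. The choice of 2 threads is arbitrary, and the training call itself is left as a placeholder comment.

```matlab
% Query the current maximum number of computational threads.
nOriginal = maxNumCompThreads;

% Set the maximum to 2 threads (arbitrary value for illustration).
% The call returns the previous setting.
nPrevious = maxNumCompThreads(2);

% ... call trainWordEmbedding here ...

% Restore the earlier setting so later computations are unaffected.
maxNumCompThreads(nPrevious);
```

Saving and restoring the previous value keeps the change local to the training step.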

References

[1] Bojanowski, P., E. Grave, A. Joulin, and T. Mikolov. "Enriching Word Vectors with Subword Information." Transactions of the Association for Computational Linguistics 5 (2017): 135-146. https://doi.org/10.1162/tacl_a_00051.

Version History

Introduced in R2017b