traincgp

Conjugate gradient backpropagation with Polak-Ribière updates

Syntax

net.trainFcn = 'traincgp'

[net,tr] = train(net,...)

Description

traincgp is a network training function that updates weight and bias values according to conjugate gradient backpropagation with Polak-Ribière updates.

net.trainFcn = 'traincgp' sets the network trainFcn property.

[net,tr] = train(net,...) trains the network with traincgp.

Training occurs according to traincgp training parameters, shown here with their default values:

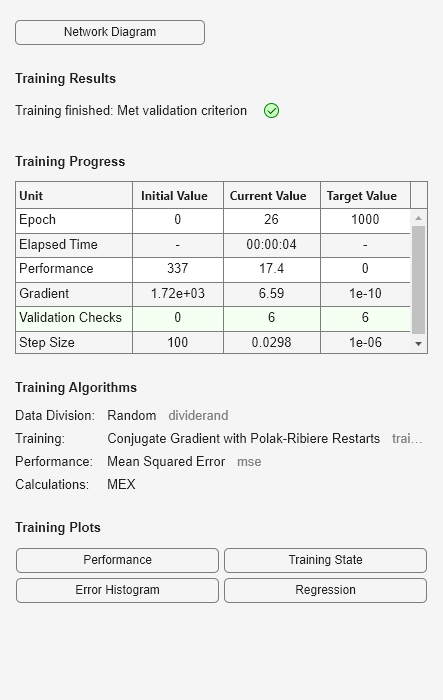

| Parameter | Default | Description |
| --- | --- | --- |
| net.trainParam.epochs | 1000 | Maximum number of epochs to train |
| net.trainParam.show | 25 | Epochs between displays (NaN for no displays) |
| net.trainParam.showCommandLine | false | Generate command-line output |
| net.trainParam.showWindow | true | Show training GUI |
| net.trainParam.goal | 0 | Performance goal |
| net.trainParam.time | inf | Maximum time to train in seconds |
| net.trainParam.min_grad | 1e-10 | Minimum performance gradient |
| net.trainParam.max_fail | 6 | Maximum validation failures |
| net.trainParam.searchFcn | 'srchcha' | Name of line search routine to use |

Parameters related to line search methods (not all used for all methods):

| Parameter | Default | Description |
| --- | --- | --- |
| net.trainParam.scal_tol | 20 | Divide into delta to determine tolerance for linear search |
| net.trainParam.alpha | 0.001 | Scale factor that determines sufficient reduction in perf |
| net.trainParam.beta | 0.1 | Scale factor that determines sufficiently large step size |
| net.trainParam.delta | 0.01 | Initial step size in interval location step |
| net.trainParam.gama | 0.1 | Parameter to avoid small reductions in performance, usually set to 0.1 (see srch_cha) |
| net.trainParam.low_lim | 0.1 | Lower limit on change in step size |
| net.trainParam.up_lim | 0.5 | Upper limit on change in step size |
| net.trainParam.maxstep | 100 | Maximum step length |
| net.trainParam.minstep | 1.0e-6 | Minimum step length |
| net.trainParam.bmax | 26 | Maximum step size |
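As a sketch of how the parameters listed above might be overridden, assuming the Deep Learning Toolbox is installed. The hidden layer size of 10 and every value assigned below are arbitrary illustrations, not recommendations.

```matlab
% Create a feedforward network with one hidden layer of 10 neurons
% (the layer size is an arbitrary choice for illustration).
net = feedforwardnet(10);

% Selecting traincgp resets net.trainParam to the traincgp defaults.
net.trainFcn = 'traincgp';

% Override a few defaults; the values are illustrative only.
net.trainParam.epochs          = 500;    % at most 500 epochs
net.trainParam.goal            = 1e-4;   % stop once performance reaches 1e-4
net.trainParam.max_fail        = 10;     % tolerate more validation failures
net.trainParam.showWindow      = false;  % suppress the training GUI
net.trainParam.showCommandLine = true;   % print progress to the command line

% Display the resulting parameter set.
disp(net.trainParam)
```

Because assigning net.trainFcn resets net.trainParam, the overrides are written after the training function is selected.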

Network Use

You can create a standard network that uses traincgp with feedforwardnet or cascadeforwardnet. To prepare a custom network to be trained with traincgp:

1. Set net.trainFcn to 'traincgp'. This sets net.trainParam to traincgp's default parameters.
2. Set net.trainParam properties to desired values.

In either case, calling train with the resulting network trains the network with traincgp.

Examples
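A minimal sketch of training a network with traincgp, assuming the Deep Learning Toolbox is available. The synthetic sine data, the hidden layer size, and the variable names x, t, y, and perf are illustrative choices rather than part of the original page.

```matlab
% Synthetic curve-fitting data (illustrative, not from the original page).
x = linspace(-1, 1, 101);      % 1-by-101 inputs
t = sin(pi * x);               % 1-by-101 targets

% Feedforward network with 10 hidden neurons that uses traincgp.
net = feedforwardnet(10, 'traincgp');
net.trainParam.showWindow = false;   % keep the run non-interactive

% Train the network and keep the training record tr.
[net, tr] = train(net, x, t);

% Evaluate the trained network on the training inputs.
y = net(x);
perf = perform(net, t, y);     % uses the network's performance function
fprintf('Final performance: %g\n', perf);
```

The initial weights and the data division are random, so the reported performance varies from run to run.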


Algorithms

traincgp can train any network as long as its weight, net input, and transfer functions have derivative functions.

Backpropagation is used to calculate derivatives of performance perf with respect to the weight and bias variables X. Each variable is adjusted according to the following:

X = X + a*dX;

where dX is the search direction. The parameter a is selected to minimize the performance along the search direction. The line search function searchFcn is used to locate the minimum point. The first search direction is the negative of the gradient of performance. In succeeding iterations the search direction is computed from the new gradient and the previous search direction according to the formula

dX = -gX + dX_old*Z;

where gX is the gradient. The parameter Z can be computed in several different ways. For the Polak-Ribière variation of conjugate gradient, it is computed according to

Z = ((gX - gX_old)'*gX)/norm_sqr;

where norm_sqr is the squared norm of the previous gradient, and gX_old is the gradient on the previous iteration. See page 78 of Scales (Introduction to Non-Linear Optimization, 1985) for a more detailed discussion of the algorithm.
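The update rules above can be sketched on a small quadratic problem that does not need the toolbox. This is not the traincgp implementation: the objective, the matrix A, the vector b, the starting point, and the exact line search that stands in for searchFcn are all assumptions made for illustration. Only the names X, dX, gX, gX_old, Z, norm_sqr, and a come from the text.

```matlab
% Minimize perf(X) = 0.5*X'*A*X - b'*X, whose gradient is gX = A*X - b.
A = [4 1; 1 3];          % symmetric positive definite (illustrative)
b = [1; 2];
X = [2; 1];              % starting point (illustrative)

gX = A*X - b;            % initial gradient
dX = -gX;                % first search direction: negative gradient

for k = 1:50
    if norm(gX) < 1e-10  % analogous to the min_grad stopping test
        break
    end

    % Exact minimizing step along dX for a quadratic; traincgp would
    % instead call the line search routine named by searchFcn.
    a = -(gX' * dX) / (dX' * A * dX);
    X = X + a*dX;

    % New gradient, keeping the previous one for the Polak-Ribière ratio.
    gX_old = gX;
    gX = A*X - b;

    norm_sqr = gX_old' * gX_old;            % squared norm of previous gradient
    Z = ((gX - gX_old)' * gX) / norm_sqr;   % Polak-Ribière coefficient
    dX = -gX + dX*Z;                        % new search direction
end

disp(X')   % approaches the solution of A*X = b
```

With an exact line search on a quadratic, the loop reaches the minimizer in at most as many steps as there are variables. Network training has no such guarantee, which is why a general line search routine is used there.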

Training stops when any of these conditions occurs:

- The maximum number of epochs (repetitions) is reached.
- The maximum amount of time is exceeded.
- Performance is minimized to the goal.
- The performance gradient falls below min_grad.
- Validation performance (validation error) has increased more than max_fail times since the last time it decreased (when using validation).
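The stopping conditions above can be sketched as a sequence of checks. The struct state, its field names, the sample values, and the message strings are hypothetical and chosen for illustration. They are not the toolbox's internal data structures or messages.

```matlab
% Training limits, using the default values from the parameter table.
param = struct('epochs', 1000, 'time', inf, 'goal', 0, ...
               'min_grad', 1e-10, 'max_fail', 6);

% Hypothetical training state after some epoch (illustrative values).
state = struct('epoch', 120, 'elapsed', 3.2, 'perf', 0.015, ...
               'gradient', 4e-11, 'val_fail', 2);

% Evaluate the stopping conditions in the order listed above.
if state.epoch >= param.epochs
    stop = 'Maximum epoch reached.';
elseif state.elapsed > param.time
    stop = 'Maximum time elapsed.';
elseif state.perf <= param.goal
    stop = 'Performance goal met.';
elseif state.gradient < param.min_grad
    stop = 'Minimum gradient reached.';
elseif state.val_fail > param.max_fail
    stop = 'Validation stop.';
else
    stop = '';   % no condition met, training would continue
end

disp(stop)
```

In this sample state only the gradient test fires, because 4e-11 is below the default min_grad of 1e-10.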

References

Scales, L.E., Introduction to Non-Linear Optimization, New York, Springer-Verlag, 1985

Version History

Introduced before R2006a