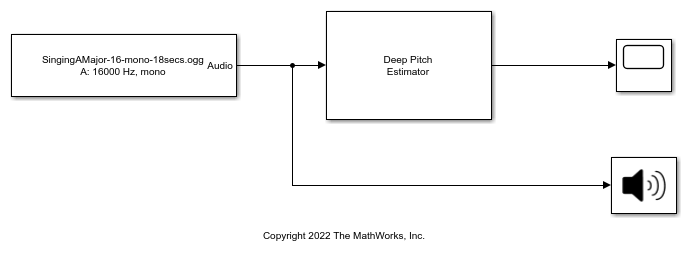

Deep Pitch Estimator

Libraries: Audio Toolbox / Deep Learning

Description

The Deep Pitch Estimator block uses a pretrained CREPE neural network [1] to estimate pitch from audio signals. The block combines the necessary audio preprocessing, network inference, and postprocessing of the network output to return pitch estimates in Hz. This block requires Deep Learning Toolbox™.
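The three stages can be illustrated with a minimal Python sketch. This is not the block's implementation or API. The constants are assumptions taken from the publicly released CREPE reference implementation described in [1]: 16 kHz input, 1024-sample frames with a 10 ms hop, each frame normalized to zero mean and unit variance, and 360 output bins spaced 20 cents apart. Postprocessing converts the output to Hz with a weighted average of the bins around the peak. Whether the block uses exactly these values is also an assumption. The network itself is replaced by a hypothetical `infer` function that maps one frame to 360 activations.

```python
import math
from typing import Callable, List, Sequence

# Constants ASSUMED from the CREPE reference implementation, not read from the block.
SAMPLE_RATE = 16000      # CREPE expects 16 kHz mono audio
FRAME_LENGTH = 1024      # samples per analysis frame
HOP_LENGTH = 160         # 10 ms hop at 16 kHz
NUM_BINS = 360           # network output bins
CENTS_PER_BIN = 20.0     # bin spacing in cents
CENTS_OFFSET = 1997.3794084376191  # cents (re 10 Hz) of bin 0, about 31.7 Hz


def frame_and_normalize(samples: Sequence[float]) -> List[List[float]]:
    """Preprocessing: split into overlapping frames, each scaled to zero mean, unit variance."""
    frames = []
    for start in range(0, len(samples) - FRAME_LENGTH + 1, HOP_LENGTH):
        frame = samples[start:start + FRAME_LENGTH]
        mean = sum(frame) / FRAME_LENGTH
        centered = [x - mean for x in frame]
        std = math.sqrt(sum(x * x for x in centered) / FRAME_LENGTH)
        std = max(std, 1e-8)  # avoid dividing by zero on silent frames
        frames.append([x / std for x in centered])
    return frames


def bin_to_cents(index: int) -> float:
    """Map an output bin index to cents relative to 10 Hz."""
    return CENTS_OFFSET + CENTS_PER_BIN * index


def activation_to_hz(activation: Sequence[float], radius: int = 4) -> float:
    """Postprocessing: weighted average of bin cents around the peak bin, converted to Hz."""
    center = max(range(len(activation)), key=lambda i: activation[i])
    lo = max(0, center - radius)
    hi = min(len(activation), center + radius + 1)
    weights = activation[lo:hi]
    # Assumes positive (sigmoid) activations near the peak, so the weight sum is nonzero.
    cents = sum(w * bin_to_cents(i) for i, w in zip(range(lo, hi), weights)) / sum(weights)
    return 10.0 * 2.0 ** (cents / 1200.0)


def estimate_pitch(
    samples: Sequence[float],
    infer: Callable[[List[float]], Sequence[float]],
) -> List[float]:
    """Full pipeline; `infer` is a HYPOTHETICAL stand-in for the pretrained network."""
    return [activation_to_hz(infer(frame)) for frame in frame_and_normalize(samples)]
```

With one second of 16 kHz audio, this sketch returns 94 estimates, one per 10 ms hop. It does not pad the signal at its edges. The local weighted average, rather than a plain argmax, lets the estimate fall between bins, giving resolution finer than the 20-cent bin spacing.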

Block Characteristics

Data Types |
Direct Feedthrough |
Multidimensional Signals |
Variable-Size Signals |
Zero-Crossing Detection |

References

[1] Kim, Jong Wook, Justin Salamon, Peter Li, and Juan Pablo Bello. “Crepe: A Convolutional Representation for Pitch Estimation.” In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 161–65. Calgary, AB: IEEE, 2018. https://doi.org/10.1109/ICASSP.2018.8461329.

Version History

Introduced in R2023a