Test Learner Solutions

When you create an assessment item in MATLAB® Grader™, define tests that assess whether the learner solution meets your passing criteria.

Define Tests

To define tests, you can use the predefined tests available in the Test Type list or write your own MATLAB code. The predefined test options include:

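Variable Equals Reference Solution: Checks the existence, data type, size, and value of a variable. This option is available in the Test Type list only for script assessment items. A default tolerance of 1e-4 applies to numeric values.

Function or Keyword is Present: Checks that the specified functions or keywords are present.

Function or Keyword is Absent: Checks that the learner solution does not contain the specified functions or keywords.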

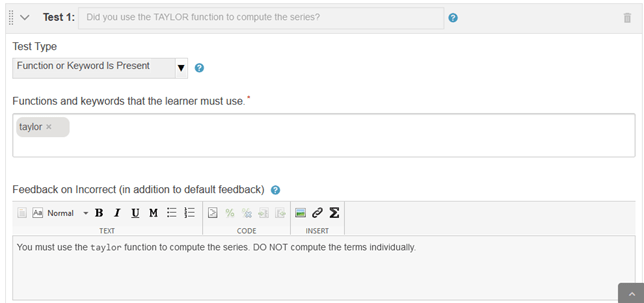

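For example, suppose that you want learners to use the taylor function to compute the Taylor series approximation of a given function. Specify the following options:

From the Test Type list, select Function or Keyword is Present.

Specify the taylor function as the function that the learner must use.

Optionally, specify additional feedback to display if the learner does not use the expected function.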

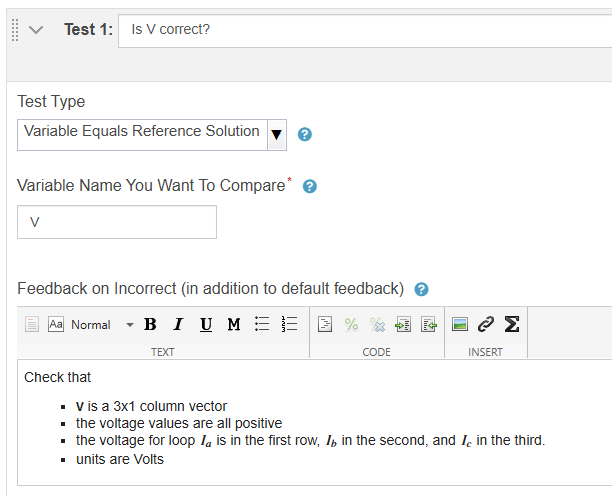

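For script assessment items, you can use the predefined test type Variable Equals Reference Solution to assess variables. For example, you can compare a variable with the variable of the same name in the reference solution to assess whether it has the correct size and the expected value and data type.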

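You can also write MATLAB code by selecting the MATLAB Code option from the Test Type list. Follow these guidelines:

Assessing correctness: You can use the functions that correspond to the predefined test types: assessVariableEqual, assessFunctionPresence, and assessFunctionAbsence. Alternatively, you can write a custom test that returns an error for incorrect results.

Variable names in script assessment items: To refer to a variable in the reference solution, add the prefix referenceVariables (for example, referenceVariables.myvar). To refer to a learner variable, use the variable name.

Function names in function assessment items: To call the reference solution function in an assessment test, add the prefix reference (for example, reference.myfunction). To call the learner function, use the function name.

Variable scope: Variables that you create within the test code exist only inside that assessment test.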
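As a rough sketch of how these guidelines can combine in a MATLAB Code test for a script assessment item, consider the following. The variable name A, the choice of taylor and for as the required and prohibited names, and the error text are all hypothetical. The code is intended to run inside MATLAB Grader, where referenceVariables is available to the test.

```matlab
% Require one function and prohibit one keyword (names chosen for illustration).
assessFunctionPresence('taylor');
assessFunctionAbsence('for');

% Custom check: throw an error when the result is wrong.
% A is the learner variable; referenceVariables.A comes from the reference solution.
if ~isequal(size(A), size(referenceVariables.A))
    error('A does not have the expected size.');
end

% Compare values using the function that corresponds to the predefined test type.
assessVariableEqual('A', referenceVariables.A);
```

Checking the size before the value is a design choice: it lets the custom error give more specific guidance than a single value comparison would.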

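For example, suppose that the learner must compute a value for a variable X that can fall outside the default tolerance. To compare the value in the learner solution with the value in the reference solution, call the assessVariableEqual function and specify the variable and the tolerance.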
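A call along the following lines would apply a custom tolerance. This is a sketch: the AbsoluteTolerance name-value argument and the value 0.01 are assumptions made for illustration, so check the assessVariableEqual reference page for the tolerance options that your release supports.

```matlab
% Compare the learner variable X with the reference value,
% using a tolerance wider than the default (0.01 is an illustrative value).
assessVariableEqual('X', referenceVariables.X, AbsoluteTolerance=0.01);
```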

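To test a function assessment item, call the learner function and the reference function with test inputs and compare the outputs. For example, the following code checks that a learner function named tempF2C correctly converts a temperature from Fahrenheit to Celsius.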

temp = 78;

tempC = tempF2C(temp);

expectedTemp = reference.tempF2C(temp);

assessVariableEqual('tempC',expectedTemp);

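Tip

The default error message of the assessVariableEqual function includes the name of the tested variable (for example, X and tempC in the previous examples). For function assessment items, this variable exists in the assessment test script, not in the learner code. Use meaningful names that learners can easily recognize, such as the output names in the function declaration.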

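A single assessment test can contain multiple checks. For example, the following code checks that the learner implements a function named normsinc that correctly handles both zero and nonzero values. For the zero case, the Feedback name-value argument of the assessVariableEqual function provides additional feedback to the learner.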

nonzero = 0.25*randi([1 3]);
y_nonzero = normsinc(nonzero);
expected_y_nonzero = reference.normsinc(nonzero);
assessVariableEqual('y_nonzero',expected_y_nonzero);

y_zero = normsinc(0);
expected_y_zero = reference.normsinc(0);
assessVariableEqual('y_zero',expected_y_zero, ...
Feedback='Inputs of 0 should return 1. Consider an if-else statement or logical indexing.');

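Give Partial Credit

By default, a learner submission is considered correct only if it passes all tests, and incorrect if any test fails. To give partial credit, change the scoring method to Weighted and assign relative weights to the tests. MATLAB Grader computes the percentage for each test from the sum of the relative weights, so you can define the weights as points or as percentages.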

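For example, if you set the relative weight of every test to 1, each test carries equal weight. If you set some weights to 2, those tests carry twice the weight of the tests whose weight is 1.

You can also enter the weights as percentages.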
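To make the arithmetic concrete, the following sketch computes the share of the grade for each test from its relative weight. It illustrates the proportion described above rather than the internal implementation of MATLAB Grader, and the weights and pass/fail results are made-up values.

```matlab
% Relative weights for three tests (illustrative values).
weights = [1 1 2];

% Share of the total grade for each test, in percent.
share = 100 * weights / sum(weights);   % [25 25 50]

% Suppose the learner passes the first and third tests.
passed = [true false true];
score = sum(share(passed));             % 75
```

Entering the weights as 25, 25, and 50 yields the same shares, which is why points and percentages are interchangeable.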

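Specify Pretests

Pretests are assessment tests that learners can run to check whether their solution is on the right track before they submit it for grading. Consider using pretests to guide learners when several correct approaches exist but the tests require a particular one, or when you have set a submission limit on the assignment.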

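Pretests differ from regular assessment tests in the following ways:

Pretest results are not recorded in the gradebook before submission.

Running pretests does not count toward the submission limit.

Learners can view the pretest code and details (including the output of MATLAB Code tests) regardless of whether the test passes or fails. Make sure that your pretests do not contain the solution to the assessment item.

Like regular assessment tests, pretests run when the learner submits a solution and count toward the final grade.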

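For example, suppose that learners must define a system of linear equations that can be set up in several ways. Define a pretest to check that the order and the coefficients in the learner solution are as expected.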
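One possible shape for such a pretest, written as a MATLAB Code test, is sketched below. It assumes that the learner stores the coefficient matrix in a variable named A and the right-hand side in a variable named b; both names are hypothetical. Because learners can read pretest code, the sketch compares against referenceVariables rather than hard-coding the expected values.

```matlab
% Check the dimensions of the system first so that the feedback is specific.
if ~isequal(size(A), size(referenceVariables.A))
    error('A should have one row per equation and one column per unknown.');
end

% Then check that the coefficients and right-hand side match the
% reference solution, including the order of the equations.
assessVariableEqual('A', referenceVariables.A);
assessVariableEqual('b', referenceVariables.b);
```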

Learners can review the errors for any tests that did not pass and revise their solution.

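Control Error Display for Script Assessment Items

In script assessment items, an initial error can cause subsequent errors. You can encourage learners to focus on the initial error first.

When you select the Only Show Feedback for Initial Error option, detailed feedback is shown by default for the initial error, but the details for subsequent errors are hidden. Learners can click Show Feedback to display this additional feedback.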