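Deep Learning Toolbox provides a framework for designing and implementing deep neural networks with algorithms, pretrained models, and apps. You can use convolutional neural networks (CNNs, or ConvNets) and long short-term memory (LSTM) networks to perform classification and regression on image, time-series, and text data. You can build network architectures such as generative adversarial networks (GANs) and Siamese networks using automatic differentiation, custom training loops, and shared weights. With the Deep Network Designer app, you can design, analyze, and train networks graphically. The Experiment Manager app lets you manage multiple deep learning experiments, keep track of training parameters, analyze results, and compare code from different experiments. You can visualize layer activations and monitor training progress graphically.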
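Automatic differentiation is what makes custom training loops practical: the forward pass records each operation, and the chain rule is then applied backward to obtain the gradient of the loss with respect to every parameter. The following is a minimal sketch of that idea in plain Python, assuming a hypothetical scalar `Value` class and a hand-written gradient-descent loop that fits `y = 2x + 1`. It is not the Deep Learning Toolbox API, and the names, learning rate, and step count are illustrative assumptions.

```python
# Minimal scalar reverse-mode automatic differentiation (illustrative only;
# `Value` and `train` are hypothetical names, not a toolbox API).
class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # leaves have nothing to propagate

    @staticmethod
    def _wrap(other):
        return other if isinstance(other, Value) else Value(other)

    def __add__(self, other):
        other = Value._wrap(other)
        out = Value(self.data + other.data, (self, other))

        def backward():
            # d(a + b)/da = d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = backward
        return out

    def __mul__(self, other):
        other = Value._wrap(other)
        out = Value(self.data * other.data, (self, other))

        def backward():
            # d(a * b)/da = b, d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = backward
        return out

    def __sub__(self, other):
        return self + Value._wrap(other) * -1.0

    def backward(self):
        """Apply the chain rule from this node back to every input."""
        order, seen = [], set()

        def visit(node):
            # Depth-first topological sort: parents are appended before children.
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            node._backward()


def train(data, steps=500, lr=0.05):
    """Custom training loop: fit y = w*x + b by gradient descent on mean squared error."""
    w, b = Value(0.0), Value(0.0)
    for _ in range(steps):
        loss = Value(0.0)
        for x, y in data:
            err = w * x + b - y
            loss = loss + err * err
        loss = loss * (1.0 / len(data))
        w.grad = b.grad = 0.0  # clear gradients from the previous step
        loss.backward()
        w.data -= lr * w.grad
        b.data -= lr * b.grad
    return w.data, b.data, loss.data


if __name__ == "__main__":
    samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]  # y = 2x + 1
    w, b, loss = train(samples)
    print(f"w={w:.4f} b={b:.4f} loss={loss:.2e}")
```

Because gradients accumulate with `+=`, a `Value` used in several places (as `x` is in `x * y + x`) receives the sum of all its gradient contributions. This is the same mechanism that lets shared weights, such as the twin branches of a Siamese network, be trained as a single parameter set.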

You can import networks and layer graphs from TensorFlow™ 2, TensorFlow-Keras, PyTorch®, the ONNX™ (Open Neural Network Exchange) model format, and Caffe. You can also export Deep Learning Toolbox networks and layer graphs to TensorFlow 2 and the ONNX model format. The toolbox supports transfer learning with DarkNet-53, ResNet-50, NASNet, SqueezeNet, and many other pretrained models.

You can use Parallel Computing Toolbox to speed up training on single- or multi-GPU workstations, or use MATLAB Parallel Server to scale up to clusters and clouds, including NVIDIA® GPU Cloud and Amazon EC2® GPU instances.

Deep Learning Applications

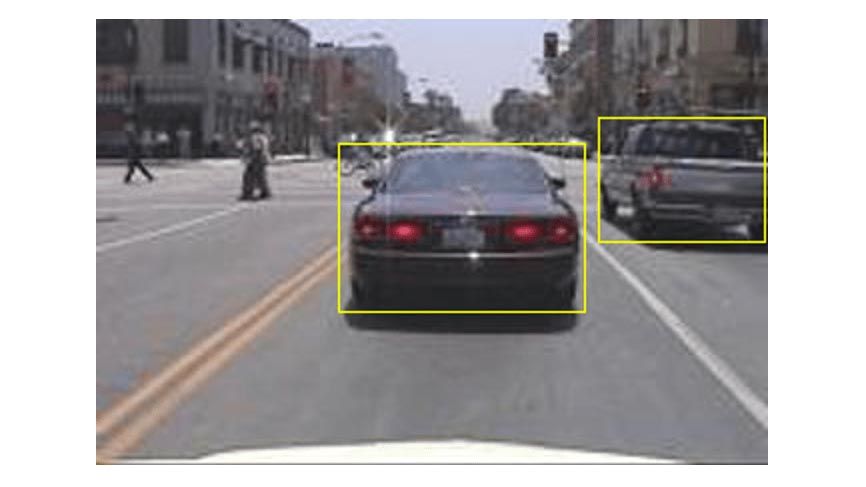

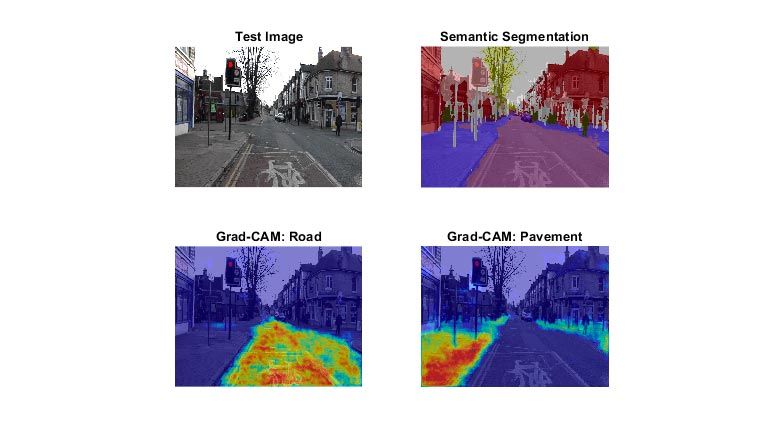

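You can train deep learning models for classification, regression, and feature learning in applications such as automated driving, signal and audio processing, wireless communications, and image processing.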

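Network Design and Model Management

You can accelerate deep learning model development with low-code apps. Use the Deep Network Designer app to create, train, analyze, and debug networks, and use the Experiment Manager app to tune and compare multiple models.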

Pretrained Models

You can access popular models with a single line of code in MATLAB, and you can import models from PyTorch®, TensorFlow™, and ONNX into MATLAB.

Code Generation

You can deploy deep learning networks to NVIDIA GPUs and processors by automatically generating optimized CUDA® code with GPU Coder and C and C++ code with MATLAB Coder. With Deep Learning HDL Toolbox, you can prototype and implement deep learning networks on FPGAs and SoCs.

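Simulation with Simulink

You can simulate deep learning networks together with control, signal processing, and sensor fusion components to assess how your deep learning model affects system-level performance.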

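Deep Learning Compression

You can quantize and prune deep learning networks to reduce memory usage and improve inference performance. With the Deep Network Quantizer app, you can analyze and visualize the tradeoff between the performance gained and the inference accuracy retained.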
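Quantization and pruning can be illustrated on a plain list of weights. The Python sketch below assumes symmetric, per-tensor int8 quantization and unstructured magnitude pruning, two common textbook schemes. It is not the algorithm used by the Deep Network Quantizer app or by the toolbox's pruning functions, and `quantize_int8`, `dequantize`, and `magnitude_prune` are hypothetical names.

```python
# Illustrative compression of a flat list of weights
# (hypothetical helpers, not the toolbox's algorithms).

def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


def magnitude_prune(weights, sparsity):
    """Set the fraction `sparsity` of weights with the smallest |w| to zero."""
    k = int(len(weights) * sparsity)
    smallest_first = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in smallest_first[:k]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    weights = [0.52, -1.27, 0.03, 0.8, -0.01, 0.4]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 codes:", q, "scale:", scale, "max error:", worst)
    print("50% pruned:", magnitude_prune(weights, 0.5))
```

Storing 8-bit codes instead of 32-bit floats cuts weight storage roughly fourfold (plus one scale per tensor), at the cost of a rounding error of at most `scale / 2` per weight. That rounding error is one source of the accuracy loss weighed in the performance/accuracy tradeoff.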