Accelerating Simulink Simulations in Continuous Integration Workflows with Simulink Cache Files

By Puneet Khetarpal, Marco Dragic, and Govind Malleichervu, MathWorks

In an Agile development workflow, designing complex systems is a collaborative effort in which large teams develop components, assemble subsystems, and integrate them into the system design. Ideally, system simulation is an integral verification step in the component design workflow, enabling engineers to verify that components meet system requirements. However, simulating a system with a complex model hierarchy many times can be prohibitively time-consuming.

One way that Simulink® accelerates simulations of large model reference hierarchies is by creating a set of intermediate derived artifacts the first time a simulation is run. For large teams, sharing and reusing these derived files, which include MEX files and other binary files, can be challenging. Consequently, team members frequently spend time rebuilding and re-creating files already created by others on the team. This redundant effort consumes time that could otherwise be spent on more productive design activities. The larger the team and the greater the complexity of the model, the greater the problem.

To address this issue, Simulink packages and stores these derived artifacts in Simulink cache files. In this article, we describe a method for managing and sharing Simulink cache files in a typical Agile development workflow that uses Git™ for source control and Jenkins™ for continuous integration (CI). This approach considerably speeds up system simulations.

The Simulink Cache

When you simulate a model in accelerator, rapid accelerator, or model reference accelerated mode, Simulink packages the derived files for each model in the hierarchy into its corresponding Simulink cache file (SLXC). Team members can share these SLXC files and the corresponding Simulink model files with each other. When a team member repeats the simulation on their machine, Simulink extracts the necessary derived files from the SLXC file for each model. As a result, Simulink avoids unnecessary rebuilds, and the simulation completes significantly faster.

The exact performance improvement depends on several factors, such as the number of models in the hierarchy, the model reference rebuild setting, the number of blocks in the referenced models, and the size and number of derived files created for each model. In our tests with a variety of system models with 0–500 referenced models and 1–10 levels of hierarchy, we saw improvements that ranged from 2x to more than 34x (Figure 1).

Figure 1. Performance improvements achieved by using Simulink cache files for various system models.

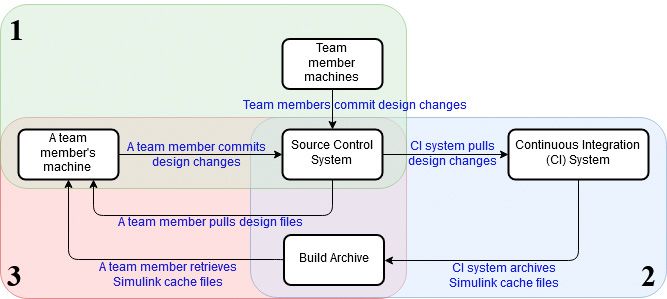

Sharing and reusing Simulink cache files in an Agile workflow that incorporates the Jenkins CI system is a three-stage process (Figure 2):

- Committing design changes to Git.

- Integrating design changes and archiving SLXC files.

  - Jenkins pulls design changes from Git and runs simulations to test them.

  - Jenkins saves the Simulink cache files in the Jenkins build archive.

- Syncing design changes and SLXC files.

  - Team members sync the latest design changes from Git and the associated cache files from the Jenkins build archive.

  - Team members run system simulations using the cache files.

Figure 2. A typical workflow for reusing Simulink cache files with source control and continuous integration systems. The shaded regions describe the three stages of the workflow.

Before looking at these stages in more detail, let's consider the requirements and best practices for working with Simulink cache.

Requirements and Best Practices for Sharing and Reusing Simulink Cache Files

The Simulink cache contains derived files that depend on the MATLAB® release, platform, and compiler used during the simulation. To share and reuse these files, all team members must therefore use the same MATLAB release, platform, and compiler. In this article, we use MATLAB R2019a, Microsoft® Windows®, and Microsoft Visual C++® 2017, respectively.
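Because a set of SLXC files is only valid for one release, platform, and compiler combination, a CI script that archives or restores cache files can check that combination before reusing anything. The Python sketch below shows one way to do that. The `CacheEnvironment` record, the `can_reuse` and `archive_subfolder` helpers, and the idea of storing archives in one subfolder per toolchain are all hypothetical; they are not part of Simulink or Jenkins.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CacheEnvironment:
    """Toolchain that produced (or will consume) a set of SLXC files.

    Hypothetical record for CI scripts; Simulink does not define this type.
    """
    release: str   # MATLAB release, e.g. "R2019a"
    platform: str  # e.g. "win64"
    compiler: str  # e.g. "Microsoft Visual C++ 2017"


def can_reuse(archived: CacheEnvironment, local: CacheEnvironment) -> bool:
    """Derived files are reusable only if release, platform, and compiler all match."""
    return archived == local


def _sanitize(text: str) -> str:
    """Replace characters that are awkward in folder names with underscores."""
    return "".join(ch if ch.isalnum() else "_" for ch in text)


def archive_subfolder(env: CacheEnvironment) -> str:
    """Hypothetical archive layout: one subfolder per toolchain combination."""
    return "/".join(_sanitize(part) for part in (env.release, env.platform, env.compiler))
```

With this layout, a machine running a different release or compiler simply finds no matching archive and lets Simulink rebuild, instead of copying in files it cannot use.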

The following best practices make it easier to accelerate simulations of large hierarchical models by reusing shared cache files:

- Follow the component-based modeling guidelines appropriate for your model.

- Make each team member responsible for a subset of the hierarchy—typically, a few Simulink models making up a component, such as a controller or plant with a well-defined interface. This minimizes issues when merging design changes.

- Use projects with startup and shutdown scripts to ensure a consistent working environment for all team members. The startup script initializes the environment when the project is opened, and the shutdown script cleans up the environment when the project is closed.

- Reference all models in Accelerator mode. To debug a referenced model on a local machine, switch it to Normal mode, keeping in mind that Normal mode does not provide the simulation performance benefits of Accelerator mode.

- Set each model’s Rebuild parameter to "If any changes in known dependencies detected" (Figure 3) and use the Model dependencies parameter to specify user-created dependencies. This improves rebuild detection speed and accuracy.

- If you are using Parallel Computing Toolbox™, select Enable parallel model reference builds (Figure 3). The model reference hierarchy will then automatically build in parallel.

- Decide whether Jenkins builds will run on every commit, at a specific time of day, or on demand. Your specific requirements will determine which timing works best and which of the following types of build to use:

  - Sterile: A build in which the Jenkins workspace is cleared of artifacts or files from the previous Jenkins build. Use a sterile build if your team changes Simulink model configurations frequently. For example, changing the hardware settings on the Simulink model hierarchy requires a clean workspace.

  - Incremental: A build in which the artifacts from the previous Jenkins build are retained. Use an incremental build if your team makes small incremental changes to individual components.

  Sterile builds usually take longer than incremental builds; how much longer depends on the size of the model hierarchy.

Figure 3. Model configuration parameters.
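The practical difference between the two build types is what happens to derived artifacts before the build starts. In Jenkins you would normally configure this on the job itself (for example, with a workspace cleanup option), so the Python sketch below only illustrates the logic as applied to the simulation cache folder. The helper name `prepare_cache_folder` and the assumption that all derived artifacts live under one cache folder are hypothetical.

```python
import shutil
from pathlib import Path


def prepare_cache_folder(cache_dir: Path, sterile: bool) -> None:
    """Reset the simulation cache folder before a CI build (hypothetical helper).

    sterile=True  -> delete everything left by the previous build, so Simulink
                     must rebuild every target from scratch.
    sterile=False -> keep the existing SLXC files, so Simulink can skip
                     rebuilding models whose known dependencies did not change.
    """
    if sterile and cache_dir.exists():
        shutil.rmtree(cache_dir)
    # In both cases the folder must exist before MATLAB writes cache files to it.
    cache_dir.mkdir(parents=True, exist_ok=True)
```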

Committing Design Changes to Git

In this step, team members modify models, simulate the model hierarchy, run Model Advisor checks, and conduct unit tests. They commit only their design files to a source control system such as Git. Simulink cache files should not be committed to source control: they are derived binary files that can take up significant disk space and cannot be compared or merged. In a Git repository, you can configure a .gitignore file so that Git ignores all derived artifacts, including SLXC files.
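A .gitignore entry keeps SLXC files out of routine commits, but files can still be force-added. The Python sketch below flags staged paths that look like derived artifacts, for example as the core of a pre-commit check. The pattern list (`*.slxc`, the `slprj` build folder, MEX binaries, and MATLAB autosave/backup files) is an assumption; extend it to match what your project actually generates.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Assumed patterns for derived artifacts; adjust to your project.
DERIVED_FILE_PATTERNS = ("*.slxc", "*.mex*", "*.asv", "*.autosave")
DERIVED_FOLDERS = ("slprj",)


def is_derived(path: str) -> bool:
    """Return True if a repository-relative path looks like a derived artifact."""
    p = PurePosixPath(path)  # Git reports paths with forward slashes
    if any(part in DERIVED_FOLDERS for part in p.parts[:-1]):
        return True
    return any(fnmatchcase(p.name, pattern) for pattern in DERIVED_FILE_PATTERNS)


def find_derived(staged_paths):
    """Return the staged paths that should not be committed."""
    return [path for path in staged_paths if is_derived(path)]
```

In a Git pre-commit hook, you could feed the output of `git diff --cached --name-only` to `find_derived` and reject the commit if the result is not empty.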

Integrating Design Changes and Archiving SLXC Files

For guidance and configuration tips on using Jenkins with Simulink, see the technical article Continuous Integration for Verification of Simulink Models.

In the rest of this article, we use the Workflow Example for Simulink Cache and Jenkins.

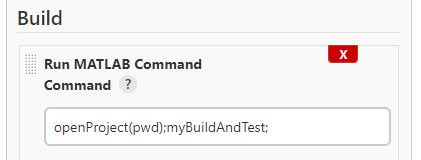

In this example, the Jenkins administrator specifies the Build command in the MATLAB Jenkins Plugin. This command launches MATLAB, builds the accelerator target, generates production C code for an embedded real-time target controller, and runs software-in-the-loop (SIL) equivalence tests. Figure 4 shows a command that opens the Simulink project and executes the myBuildAndTest script.

Figure 4. An illustration of a Jenkins Build command, which opens the Simulink project and executes the myBuildAndTest script. The script builds the accelerator target for the system, generates production C code for an embedded real-time target controller, and runs multiple simulations to test the changes.
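If you run the build from a generic shell step rather than the MATLAB plugin, the same command can be assembled by a script. The Python sketch below assumes MATLAB is on the build agent's PATH and uses the `-batch` startup option (available since R2019a), which runs a statement non-interactively and exits with a nonzero status if it errors, so Jenkins marks the build as failed. The project file name `CacheWorkflow.prj` and the helper names are placeholders.

```python
from __future__ import annotations

import subprocess


def matlab_build_command(project_file: str, script: str = "myBuildAndTest") -> list[str]:
    """Build the argv for a non-interactive MATLAB run that opens the project and runs the build script."""
    # MATLAB character vectors escape a single quote by doubling it.
    quoted = project_file.replace("'", "''")
    statement = f"openProject('{quoted}'); {script}"
    return ["matlab", "-batch", statement]


def run_build(project_file: str) -> None:
    """Run the build; raises CalledProcessError if MATLAB exits with a nonzero status."""
    subprocess.run(matlab_build_command(project_file), check=True)
```

For example, `run_build("CacheWorkflow.prj")` runs `matlab -batch "openProject('CacheWorkflow.prj'); myBuildAndTest"`.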

As part of simulation and code generation, Simulink creates SLXC files that contain the accelerator and model reference simulation targets for all models in the hierarchy. A subset of these SLXC files also contains production C code for the embedded real-time target controller. Simulink stores the SLXC files in the simulation cache folder, whose location is specified in the Project Details dialog box (Figure 5). The script then runs multiple simulations to test the design changes.

Figure 5. The Project Details dialog box specifying the location of the cache folder where SLXC files are stored. The default setting is [project root].

Additionally, the administrator sets up a Post-build Action in Jenkins to archive the SLXC files (Figure 6). After the build, Jenkins copies the SLXC files from the Jenkins workspace to the build archive location.

To specify the Jenkins build archive location, the administrator can edit the config.xml file in the Jenkins home directory.

Figure 6. A Jenkins post-build action configured to archive all Simulink cache files from the Jenkins workspace to the build archive area after the build has finished.

Syncing Design Changes and SLXC Files

Typically, Jenkins builds are performed nightly. Each team member can then sync a sandbox based on the last successful build. Team members check out the design changes from Git, retrieve the associated Simulink cache files from the build archive area, and place the Simulink cache files in their simulation cache folders before simulating. Teams can set up a script to automate this process.
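The last step of that sync, placing the archived cache files where Simulink will look for them, can be scripted as in the Python sketch below. It assumes the archived build has already been downloaded to a local folder and that Simulink expects the SLXC files directly inside the simulation cache folder; the function name `restore_slxc` is hypothetical.

```python
from __future__ import annotations

import shutil
from pathlib import Path


def restore_slxc(archive_dir: Path, cache_dir: Path) -> list[str]:
    """Copy every SLXC file from a downloaded build archive into the cache folder.

    Files are flattened into cache_dir (assumed layout), so two archived files
    with the same name would overwrite each other. Existing cache files with
    the same name are replaced, so the cache matches the archived build.
    """
    cache_dir.mkdir(parents=True, exist_ok=True)
    restored = []
    for src in archive_dir.rglob("*.slxc"):
        # copy2 copies the file contents plus metadata such as modification time
        shutil.copy2(src, cache_dir / src.name)
        restored.append(src.name)
    return sorted(restored)
```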

In this example, the syncSLXCForCurrentHash script queries a SQLite database for successful builds, finds the build that corresponds to the current Git commit hash, and copies that build's SLXC files from the Jenkins build archive area into the simulation cache folder.
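The database schema behind syncSLXCForCurrentHash is not described here, so the Python sketch below assumes a hypothetical `builds` table with one row per Jenkins build (`build_number`, `commit_hash`, `result`, `archive_path`). It shows the core lookup: given the commit currently checked out, find the newest successful build of that exact commit and return the location of its archived SLXC files.

```python
from __future__ import annotations

import sqlite3
import subprocess


def current_commit(repo_dir: str = ".") -> str:
    """Return the full hash of the commit checked out in repo_dir."""
    result = subprocess.run(
        ["git", "rev-parse", "HEAD"],
        cwd=repo_dir, capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def archive_for_commit(db: sqlite3.Connection, commit_hash: str) -> str | None:
    """Return the archive path of the newest successful build of commit_hash, or None."""
    row = db.execute(
        "SELECT archive_path FROM builds "
        "WHERE commit_hash = ? AND result = 'SUCCESS' "
        "ORDER BY build_number DESC LIMIT 1",
        (commit_hash,),
    ).fetchone()
    return row[0] if row else None
```

If the lookup returns None, the checked-out commit has no successful build yet; team members can still simulate, and Simulink builds the targets locally.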

Enhancing the Workflow

There are several ways to make this workflow faster and less error prone. To manage SLXC files from multiple Jenkins builds, you can use a database, as this example does, or a repository management tool. To scale simulation performance even further, you can use parsim to run multiple system simulations in parallel over a range of input parameter values.

Published 2022